Difference in results between Generalized RSquare and RSquare

Hi everyone,

I tried to find an answer to my question in the community and in JMP help, but I couldn't. I would really appreciate it if anyone could point me in the right direction or let me know what basic concept I am missing.

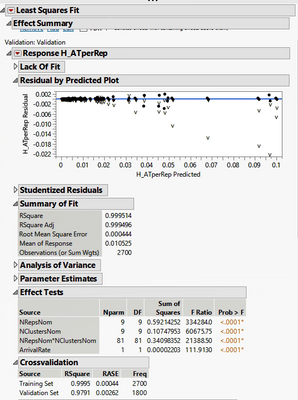

I am fitting a model to my response (dependent variable, DV), which is continuous, using 3 independent variables (IVs) [2 categorical and 1 continuous] and the interaction between 2 of them (for the third one, I am using only the main effect). I first used Least Squares for that, as you can see in the screenshot below.

However, my residual by predicted plot does not look very good (I have some outliers). No matter which other factors I tried to add to the model - or transformation - I was not able to improve my residuals plot. The best I could get was using a change of my response, which is the one I am already showing above.

Next, I thought about using the Penalized Regression Method (more specifically Ridge) through the Generalized Regression. I read it can be more robust to outliers (though it will introduce some bias).

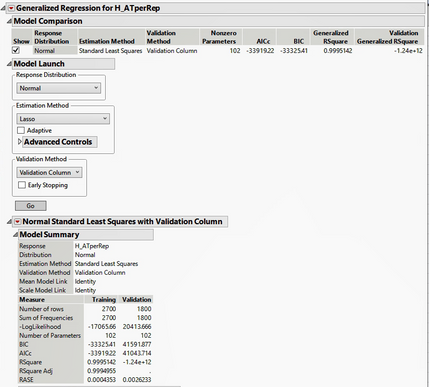

I started running the Generalized Regression with Standard Least Squares.

According to some videos I have seen, this should produce the same results as the Least Squares regression.

Also, according to JMP help (https://www.jmp.com/support/help/en/15.2/index.shtml#page/jmp/training-and-validation-measures-of-fi...) the Generalized RSquare provided should be between 0 and 1 and it will simplify to the traditional RSquare for continuous normal responses in the standard least-squares setting. However, as you can see from the screenshot below, although the Generalized RSquare matches the RSquare for the Training set, it does not match for the Validation set (and it is negative). The other statistics (e.g. RASE) do match both sets (Training and Validation).
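To make the "simplifies to the traditional RSquare" statement concrete, here is a minimal sketch on made-up data. It assumes the likelihood-ratio form 1 - (L0/L1)^(2/n) for the generalized measure, with each model using its own maximum-likelihood variance; the thread does not state exactly how JMP evaluates it, so the function names and the formula choice are illustrative assumptions only.

```python
import math
import random

def fit_line(x, y):
    """Ordinary least squares for y = a + b*x (closed form)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b

def gaussian_loglik(residuals, sigma2):
    """Normal log-likelihood of the residuals for a given variance."""
    n = len(residuals)
    sse = sum(r * r for r in residuals)
    return -0.5 * n * math.log(2 * math.pi * sigma2) - sse / (2 * sigma2)

random.seed(1)  # simulated data, not the data from this thread
x = [random.uniform(0, 10) for _ in range(200)]
y = [2.0 + 0.5 * xi + random.gauss(0, 1) for xi in x]
n = len(y)

a, b = fit_line(x, y)
y_bar = sum(y) / n
res_model = [yi - (a + b * xi) for xi, yi in zip(x, y)]
res_null = [yi - y_bar for yi in y]
sse = sum(r * r for r in res_model)
sst = sum(r * r for r in res_null)

# Traditional RSquare from sums of squares.
r2 = 1 - sse / sst

# Likelihood-ratio RSquare; each model uses its own ML variance estimate.
ll_model = gaussian_loglik(res_model, sse / n)
ll_null = gaussian_loglik(res_null, sst / n)
gen_r2 = 1 - math.exp(2 * (ll_null - ll_model) / n)

print(r2, gen_r2)
```

On the rows used for fitting, the two quantities agree up to floating-point error, because the log-likelihood difference reduces to log(SSE/SST).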

Any ideas about what may be happening here?

Thank you in advance for any ideas and hints!

PS: For everything I am doing in this post, I used the FitModel platform in JMP.

Re: Difference in results between Generalized RSquare and RSquare

Hi @SDF1

Thank you so much for your reply and your insights. They are very helpful and they align with a few things I was thinking.

I will provide some extra information here in case you have more insights to give (I would really appreciate it).

1. The response, stratified by the validation column, does have (very) similar distributions in both sets. As I explained to Mark, the response was calculated based on computer experiments generated using different seeds (and I used the seeds to stratify into training and validation sets).

2. Let's assume I am overfitting, my main question is:

Why is my RSquare for the training set 0.9995 using Least Squares either directly from FitModel or through Generalized Regression, while my RSquare for the validation set is 0.9791 using Least Squares directly from FitModel and -1.24e+12 using Least Squares through Generalized Regression?

I do not understand the differences in the RSquare values for the validation set. Shouldn't the values be the same (even if there is overfitting)?

3. I do think I have reason to include the terms in my model. First, my response was calculated with different data based on the different number of replications (one categorical IV) and different "formula/data" based on the different number of clusters (second categorical IV). The known theoretical formula to calculate the response takes into consideration the arrival rate (continuous IV), because that value affects the data generated.

I am not sure how I would do bootstrapping in JMP. But I did try different models manually (with and without the terms) and I also tried using Forward Stepwise. In both cases, the results ended up showing that the two categorical IVs + their interaction + the continuous IV was the best model.

4. Your last point is actually something that I was hoping someone would bring to this discussion.

My response is not actually Normal (it is LogNormal, in fact).

So, I am going to adjust that when I try Ridge or ElasticNet.

But even if my response is not Normal, shouldn't the RSquare values for the Validation sets in both images I uploaded match?
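One candidate mechanism for such a mismatch can be sketched as follows. This is a guess, not confirmed JMP behavior: it assumes the likelihood-ratio measure is evaluated on the validation rows with every parameter, including the error variance, frozen at its training-set estimate. The data are simulated and all names are hypothetical.

```python
import math
import random

def fit_line(x, y):
    """Ordinary least squares for y = a + b*x (closed form)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    b = sxy / sxx
    return my - b * mx, b

def gaussian_loglik(residuals, sigma2):
    """Normal log-likelihood of the residuals for a given variance."""
    n = len(residuals)
    sse = sum(r * r for r in residuals)
    return -0.5 * n * math.log(2 * math.pi * sigma2) - sse / (2 * sigma2)

def make_data(n, noise_sd, rng):
    x = [rng.uniform(0, 10) for _ in range(n)]
    y = [2.0 + 0.5 * xi + rng.gauss(0, noise_sd) for xi in x]
    return x, y

rng = random.Random(7)
x_tr, y_tr = make_data(200, 0.03, rng)  # very clean training rows
x_va, y_va = make_data(100, 0.20, rng)  # somewhat noisier validation rows

a, b = fit_line(x_tr, y_tr)
n_tr, n_va = len(y_tr), len(y_va)
mean_tr = sum(y_tr) / n_tr
mean_va = sum(y_va) / n_va

# Variance estimates come from the training rows only (assumption).
sigma2_model = sum((yi - (a + b * xi)) ** 2 for xi, yi in zip(x_tr, y_tr)) / n_tr
sigma2_null = sum((yi - mean_tr) ** 2 for yi in y_tr) / n_tr

# Sum-of-squares RSquare on the validation rows (validation mean in SST).
res_va = [yi - (a + b * xi) for xi, yi in zip(x_va, y_va)]
sse_va = sum(r * r for r in res_va)
sst_va = sum((yi - mean_va) ** 2 for yi in y_va)
r2_va = 1 - sse_va / sst_va

# Likelihood-ratio RSquare on the validation rows with frozen parameters.
ll_model_va = gaussian_loglik(res_va, sigma2_model)
ll_null_va = gaussian_loglik([yi - mean_tr for yi in y_va], sigma2_null)
gen_r2_va = 1 - math.exp(2 * (ll_null_va - ll_model_va) / n_va)

print(r2_va, gen_r2_va)
```

Under these assumptions the sum-of-squares RSquare stays high, while the likelihood-based value becomes a very large negative number: the tiny training variance makes the validation residuals look extremely unlikely, so the constant model with its wide variance wins the likelihood comparison. Whether this is what happens inside Generalized Regression is not established in this thread.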

Thank you so much for your insights!

Re: Difference in results between Generalized RSquare and RSquare

Hi @AnnaPaula ,

I'm not sure I quite follow how you used the calculated seeds to stratify the validation column. But if you use the validation column platform in JMP Pro (which you have if you can access GenReg), then you can just stratify on the response (Y variable) you're fitting. If all your outliers are in the validation set, you might want to rethink how you generated the two sets.

Another point you might consider is splitting off a test data set entirely, into another data table, that is not used to fit the model. If you run multiple models on the training/validation set, you can then use the test data set to see which model is really the best at prediction; you can do this using the Model Comparison platform. Presumably, your goal is to have the best predictive capability, and you need to test that somehow. It looks like you have a large enough data set that you could do something like that. For example, make a validation column with, e.g., 60% train, 20% validation, 20% test. Subset the test data into an entirely different table and then use the remaining train/validation subset to build models.
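Outside of JMP, the 60/20/20 idea with stratification on the response can be sketched roughly as below. The helper `stratified_split` is hypothetical (it is not a JMP function) and the response values are simulated; it bins the response by rank and assigns labels at random within each bin.

```python
import math
import random

def stratified_split(y, fractions=(0.6, 0.2, 0.2), n_bins=5, seed=0):
    """Label each row train/validation/test, stratified on y via rank bins."""
    rng = random.Random(seed)
    labels = [None] * len(y)
    order = sorted(range(len(y)), key=lambda i: y[i])  # row indices sorted by y
    bin_size = math.ceil(len(y) / n_bins)
    for start in range(0, len(order), bin_size):
        idx = order[start:start + bin_size]
        rng.shuffle(idx)  # random assignment within the bin
        n_train = round(fractions[0] * len(idx))
        n_val = round(fractions[1] * len(idx))
        for k, i in enumerate(idx):
            if k < n_train:
                labels[i] = "train"
            elif k < n_train + n_val:
                labels[i] = "validation"
            else:
                labels[i] = "test"  # whatever remains in the bin
    return labels

rng = random.Random(3)
y = [rng.lognormvariate(0, 1) for _ in range(1000)]  # simulated response
labels = stratified_split(y)
counts = {name: labels.count(name) for name in ("train", "validation", "test")}
print(counts)
```

Because every bin contributes rows to all three sets in the same proportions, the low and high ends of the response (including outliers) are spread across train, validation, and test rather than landing in one set.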

For your other questions about R^2 and it being negative or not quite matching up, I'd refer to what Mark said.

If your data is log-normally distributed, you'll want to select that option in the model specification, as that changes some of the underlying processes behind how it does the fitting.

Once you have created a model in SLS, GenReg, or PLS, you can bootstrap the estimates by right-clicking the Estimate column and selecting Bootstrap. You'll want to run several thousand samples and then look at the distributions to see whether the original estimate for the coefficient of that term is close to the overall mean of the many estimates. JMP refits the model on resampled versions of the data and therefore generates many different estimates; you can then look at that distribution and determine 1) whether the estimate from the first fit was accurate, and 2) whether the coefficient for the effect is really contributing a lot or not. For example, in the SLS platform, each effect is given an FDR LogWorth value that accounts for the false discovery rate. If the value is > 2 (the blue line), then there's significant evidence that the effect really does contribute to the model. On the other hand, it could be smaller than, or near, 2. If you bootstrap those FDR LogWorth values, you can find the mean and range to determine whether the effect is meaningful. I've had times where an effect looked like it could be borderline, and after bootstrapping the FDR LogWorth, most of the time it was actually not crossing above 2 and was therefore not really an important factor in the model.
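As a rough illustration of the idea behind bootstrapping an estimate, the sketch below resamples rows with replacement, refits a one-predictor least-squares line each time, and summarizes the resulting slopes. It uses simulated data and hypothetical helper names, and JMP's own bootstrap may differ in its details (for example in how rows are weighted).

```python
import random
import statistics

def fit_slope(x, y):
    """Least-squares slope for y = a + b*x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx

def bootstrap_slopes(x, y, n_boot=2000, seed=0):
    """Refit the slope on n_boot resamples of the rows (with replacement)."""
    rng = random.Random(seed)
    n = len(x)
    slopes = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        slopes.append(fit_slope([x[i] for i in idx], [y[i] for i in idx]))
    return slopes

rng = random.Random(11)
x = [rng.uniform(0, 10) for _ in range(100)]          # simulated predictor
y = [2.0 + 0.5 * xi + rng.gauss(0, 1) for xi in x]    # simulated response

slope_hat = fit_slope(x, y)        # estimate from the original fit
slopes = bootstrap_slopes(x, y)
sorted_slopes = sorted(slopes)
lo = sorted_slopes[int(0.025 * len(sorted_slopes))]       # ~2.5th percentile
hi = sorted_slopes[int(0.975 * len(sorted_slopes)) - 1]   # ~97.5th percentile
boot_mean = statistics.mean(slopes)

print(slope_hat, boot_mean, (lo, hi))
```

If the original estimate sits near the middle of the bootstrap distribution and the percentile interval stays well away from zero, that supports keeping the term; a wide interval straddling zero suggests the effect is borderline.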

As a last note, I highly recommend running multiple different kinds of modeling platforms: boosted tree, bootstrap forest, NN, etc. to see if another platform might work best for your data/situation.

Hope this helps!

DS

Re: Difference in results between Generalized RSquare and RSquare

Thank you!

I had no idea we were able to bootstrap the estimates in JMP. Thank you for teaching me that!

Thank you also for all the other insights. Very helpful!
