Discussions: Solve problems, and share tips and tricks with other JMP users. (JMP User Community)

Classification problem

Hi all, and happy new year 2021.

I'm working on a classification problem with a binary response called Group (states 0 and 1), as reported in the attached xls file. Overall there are 13 predictors, 2 of which are categorical. This table is the result of cleaning, feature selection, and clustering (to remove collinearity). Because state 1 of Group is strongly imbalanced with respect to state 0, I used the SMOTE+TOMEK add-ins to balance the response; the added rows can be recognized by the Source column. I ran several classification models, whose results are in the attached ppt file. The NN has the highest R2 and the lowest misclassification error, while Naive Bayes is the worst, with a misclassification error of about 0.2. Since I'm wary of a model with 0 misclassification error (overfitting), which one, in your opinion, is the most reliable model (the one that, once deployed, will keep the same misclassification error)?

Thanks, Felice
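Since the question compares models by misclassification error on an imbalanced response, a useful reference point is the error of a trivial model that always predicts the majority class. Below is a minimal Python sketch with made-up labels (not the attached data); the function names are hypothetical and are not JMP features.

```python
from collections import Counter

def misclassification_rate(actual, predicted):
    """Fraction of rows whose predicted class differs from the actual class."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    errors = sum(a != p for a, p in zip(actual, predicted))
    return errors / len(actual)

def majority_baseline_rate(actual):
    """Misclassification rate of a trivial model that always predicts the most frequent class."""
    majority_count = Counter(actual).most_common(1)[0][1]
    return (len(actual) - majority_count) / len(actual)

def confusion_counts(actual, predicted, positive=1):
    """Return (TP, FP, FN, TN) for a binary response, treating `positive` as the event."""
    pairs = list(zip(actual, predicted))
    tp = sum(a == positive and p == positive for a, p in pairs)
    fp = sum(a != positive and p == positive for a, p in pairs)
    fn = sum(a == positive and p != positive for a, p in pairs)
    tn = len(pairs) - tp - fp - fn
    return tp, fp, fn, tn

# Made-up labels for illustration only: 90 rows in state 0, 10 rows in state 1.
actual = [0] * 90 + [1] * 10
always_zero = [0] * 100
print(misclassification_rate(actual, always_zero))  # 0.1
print(majority_baseline_rate(actual))               # 0.1
print(confusion_counts(actual, always_zero))        # (0, 0, 10, 90)
```

With this made-up 90/10 split, a model that never predicts state 1 already has a misclassification rate of 0.1 while missing every state-1 row, which is why the confusion counts are worth reading alongside the single error figure.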

Re: Classification problem

A few things that I noticed from my very quick look at your results.

First, this is a JMP community, so a JMP file would be preferable to an Excel file. After all, the first thing that I did was put the data into JMP so that I could see it! Plus, you could have saved your different models as scripts that could be re-run to show the options you tried.

I do not know how you implemented SMOTE+TOMEK to balance the responses, but there are other ways you could attempt to balance them: you could oversample, ignore some of the more prevalent cases, assign rows to training/validation/test based on a stratified sampling of the response, etc. Regardless, if you are concerned about the "perfect" model, could these models be picking up on how the balancing was done? If an algorithm is doing the balancing, another model or algorithm just might be able to learn it.
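To make the "could the model learn the balancing?" question concrete, here is a minimal pure-Python sketch of the core ideas behind SMOTE and Tomek links. It is an illustration under stated assumptions (numeric predictors only, Euclidean distance, hypothetical function names), not the implementation used by the JMP add-in, which the thread does not show. SMOTE places each synthetic minority row on the straight segment between a real minority row and one of its nearest minority neighbors; Tomek cleaning looks for mutual nearest-neighbor pairs with opposite labels.

```python
import math
import random

def smote(minority, n_new, k=5, seed=0):
    """Create n_new synthetic minority rows (core SMOTE idea, numeric predictors only).

    Each synthetic row lies on the segment between a randomly chosen minority
    row and one of its k nearest minority neighbors (Euclidean distance).
    Requires at least two minority rows.
    """
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        # k nearest *other* minority rows, found by index to avoid self-matches
        neighbors = sorted((j for j in range(len(minority)) if j != i),
                           key=lambda j: math.dist(base, minority[j]))[:k]
        other = minority[rng.choice(neighbors)]
        gap = rng.random()  # random position along the segment, in [0, 1)
        synthetic.append(tuple(b + gap * (o - b) for b, o in zip(base, other)))
    return synthetic

def tomek_links(rows, labels):
    """Return index pairs (i, j), i < j, that are mutual nearest neighbors with
    different labels; Tomek cleaning drops one or both rows of each pair."""
    nearest = [min((j for j in range(len(rows)) if j != i),
                   key=lambda j: math.dist(rows[i], rows[j]))
               for i in range(len(rows))]
    return [(i, j) for i, j in enumerate(nearest)
            if i < j and nearest[j] == i and labels[i] != labels[j]]

# Made-up toy data for illustration only.
minority = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
print(smote(minority, n_new=3, k=2))
print(tomek_links([(0.0,), (1.0,), (1.1,), (5.0,)], [0, 0, 1, 1]))  # [(1, 2)]
```

Because every synthetic row is an interpolation of real rows, the synthetic rows share a regular geometric structure that a flexible model could, in principle, pick up on. The two categorical predictors in this data set would need a variant such as SMOTE-NC, which this sketch does not cover.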

Assuming that the balancing cannot affect the modeling effort, you split your data into training, validation, and test sets. Your test set should be indicative of real-world performance, so I do not believe there is any over-fitting going on. The test set is not used at all in the fitting process. If you believe the model is too good, then make sure that your data (and especially the test set) is representative of your process.
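One way to keep the test set representative is to stratify the training/validation/test assignment on the response and, if synthetic balancing is used, to generate synthetic rows for the training set only. The sketch below assumes a 60/20/20 split; the fractions, role names, and function name are illustrative assumptions, not the settings used in the thread.

```python
import random
from collections import Counter, defaultdict

def stratified_split(labels, train_frac=0.6, valid_frac=0.2, seed=0):
    """Assign each row to 'Training', 'Validation' or 'Test' so that every class
    of the response is split in (roughly) the same proportions.
    The test share is whatever remains after training and validation."""
    rng = random.Random(seed)
    rows_by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        rows_by_class[label].append(idx)
    roles = [None] * len(labels)
    for indices in rows_by_class.values():
        rng.shuffle(indices)
        n_train = round(train_frac * len(indices))
        n_valid = round(valid_frac * len(indices))
        for pos, idx in enumerate(indices):
            if pos < n_train:
                roles[idx] = "Training"
            elif pos < n_train + n_valid:
                roles[idx] = "Validation"
            else:
                roles[idx] = "Test"
    return roles

# Made-up response with 90 rows of state 0 and 10 rows of state 1.
labels = [0] * 90 + [1] * 10
roles = stratified_split(labels)
print(Counter(zip(roles, labels)))
# Any synthetic balancing (e.g. SMOTE) would then be applied to the
# "Training" rows only, so validation and test keep the real class mix.
```

The design choice here is that the validation and test rows keep the real class mix, so their error rates estimate performance on data like the process actually produces rather than on a balanced mix.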

There are 89 rows without a validation value. Why? Are you excluding those rows from your analysis? Why?

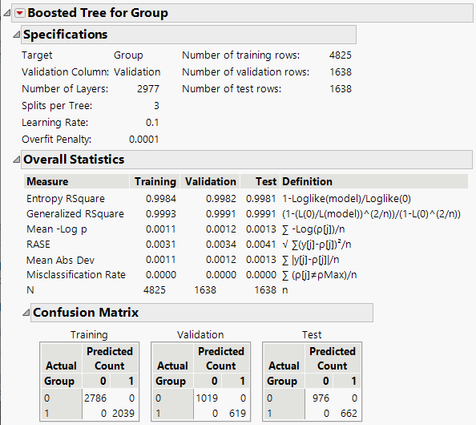

It looks like you used all of the default values for the boosted tree. Notice that a default boosted tree has 50 layers, and your final results show that all 50 layers were used. That suggests you could get a better model by allowing more boosted layers. Here is a result when I specified a very high number of layers.
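The reasoning about the boosted tree can be sketched with generic early-stopping logic: if the validation error is still improving at the last allowed layer, the layer cap, not the data, ended the fit. This is a minimal sketch that assumes a simple "patience" rule and a per-layer validation-error curve; it is not JMP's documented stopping rule, and the function names are hypothetical.

```python
def early_stopping_layer(validation_errors, patience=5):
    """Return (best_layer, stopped_early) for a per-layer validation-error curve.

    validation_errors[i] is the validation error after i + 1 boosting layers.
    Fitting stops once the error has not improved for `patience` consecutive
    layers; if that never happens, every available layer was used.
    """
    best_layer, best_error, since_best = 0, float("inf"), 0
    for layer, error in enumerate(validation_errors, start=1):
        if error < best_error:
            best_layer, best_error, since_best = layer, error, 0
        else:
            since_best += 1
            if since_best >= patience:
                return best_layer, True
    return best_layer, False

def needs_more_layers(validation_errors, patience=5):
    """True when the best validation error occurs at the last allowed layer,
    i.e. the layer cap ended the fit while the error was still improving."""
    best_layer, _ = early_stopping_layer(validation_errors, patience)
    return best_layer == len(validation_errors)

# Made-up curves: one still improving at layer 50, one that levels off early.
improving = [0.30 - 0.005 * i for i in range(50)]
plateau = [0.30, 0.20, 0.15, 0.16, 0.17, 0.18, 0.19, 0.20] + [0.20] * 42
print(early_stopping_layer(improving), needs_more_layers(improving))  # (50, False) True
print(early_stopping_layer(plateau), needs_more_layers(plateau))      # (3, True) False
```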

The support vector machine also provided a perfect fit according to the misclassification rate. Why not consider that?

The only way to truly have confidence in a model is to start using it on new data. Why not go forward with all three (more layered boosted tree, neural net, and support vector machines) and let the data determine which one truly works better? Do you need to consider the speed of model evaluation in determining which one you use?
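If the candidate models all score the same new rows as they arrive, one standard way to ask whether one "truly works better" is an exact McNemar test on the paired predictions. This test is a suggestion added here, not something proposed in the thread; the sketch below uses hypothetical function names and made-up predictions.

```python
from math import comb

def discordant_counts(actual, pred_a, pred_b):
    """Count rows where exactly one of the two models is correct.

    Returns (only_a_right, only_b_right); rows where both models are right,
    or both are wrong, carry no information about which model is better.
    """
    only_a_right = only_b_right = 0
    for y, pa, pb in zip(actual, pred_a, pred_b):
        if pa == y and pb != y:
            only_a_right += 1
        elif pb == y and pa != y:
            only_b_right += 1
    return only_a_right, only_b_right

def mcnemar_exact_p(actual, pred_a, pred_b):
    """Two-sided exact McNemar p-value for two classifiers scored on the same rows.

    Under the null hypothesis of equal error rates, the discordant count
    only_a_right follows Binomial(n, 0.5) with n = total discordant rows.
    """
    b, c = discordant_counts(actual, pred_a, pred_b)
    n = b + c
    if n == 0:
        return 1.0  # the models never differ in correctness
    tail = sum(comb(n, i) for i in range(min(b, c) + 1)) / 2 ** n
    return min(1.0, 2 * tail)

# Made-up example: model B misses 6 rows that model A gets right.
actual = [1] * 20
model_a = [1] * 20
model_b = [0] * 6 + [1] * 14
print(mcnemar_exact_p(actual, model_a, model_b))  # 0.03125
```

With three candidates (boosted tree, neural net, SVM) you would run the three pairwise comparisons and allow for multiple testing; and if the models rarely disagree on new rows, the test will need many rows before it can separate them.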

Re: Classification problem

Ciao Dan, thanks for your reply.

You are right about the xls file. Next time I will share the JMP file instead of the Excel one.

The SMOTE+TOMEK add-in is not affecting the model: the performance is exactly the same with and without it.

You are right that for some of the models I developed I used only the default hyperparameter values proposed by JMP.

The 89 rows you are referring to were not used to develop the models. I also checked model performance on these 89 unseen examples (in addition to the test set), and the models confirmed the good performance seen on the training and test sets. Everything seems to be fine.
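A zero (or near-zero) misclassification count on a small holdout still leaves room for a non-zero true error rate. Assuming the holdout rows are independent and representative of deployment data, an exact one-sided binomial (Clopper-Pearson) upper bound shows how large the true rate could plausibly be. The thread does not report the exact error count on the 89 rows, so the call below uses 0 errors purely as an illustration; the function names are hypothetical.

```python
from math import comb

def binom_cdf(x, n, p):
    """P(X <= x) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(x + 1))

def error_rate_upper_bound(errors, n, confidence=0.95):
    """One-sided exact (Clopper-Pearson) upper bound on the true error rate
    after observing `errors` misclassifications in n independent rows."""
    if errors >= n:
        return 1.0
    alpha = 1 - confidence
    lo, hi = 0.0, 1.0
    # Bisection: the binomial CDF decreases as p grows, so search for the p
    # at which P(X <= errors | p) drops to alpha.
    for _ in range(100):
        mid = (lo + hi) / 2
        if binom_cdf(errors, n, mid) > alpha:
            lo = mid
        else:
            hi = mid
    return hi

# Illustration: 0 errors observed on 89 unseen rows.
print(round(error_rate_upper_bound(0, 89), 4))
```

For 0 errors the bound has the closed form 1 - 0.05^(1/n), which for n = 89 is roughly 3.3%, close to the familiar "rule of three" approximation 3/n. In other words, a perfect score on 89 rows is compatible with a true error rate of a few percent.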

Maybe, as you suggest, the only way to be sure about the reliability of the models is to deploy them and check their performance on new data.

- © 2026 JMP Statistical Discovery LLC. All Rights Reserved.
