See how to:

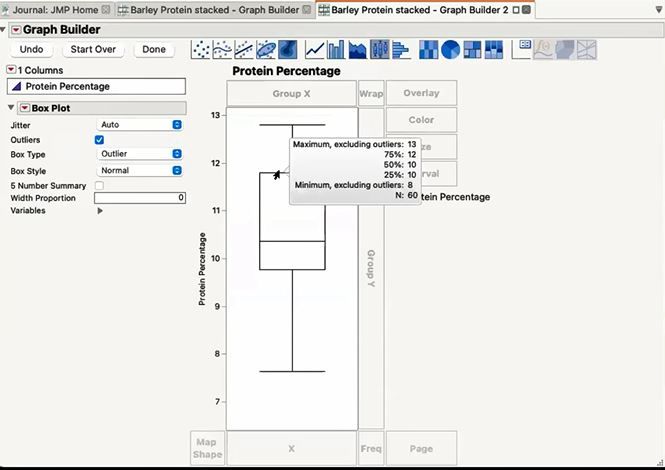

- Look at box plots and their summary statistics, a chart form originally proposed by Mary Eleanor Spear as a way to present information about populations of people

- Use Student's t-test, first presented by William Sealy Gosset, to determine whether a mean differs from a target or whether two means are statistically indistinguishable; for example, whether grain samples from different lots have the same (acceptable) protein content as a target value

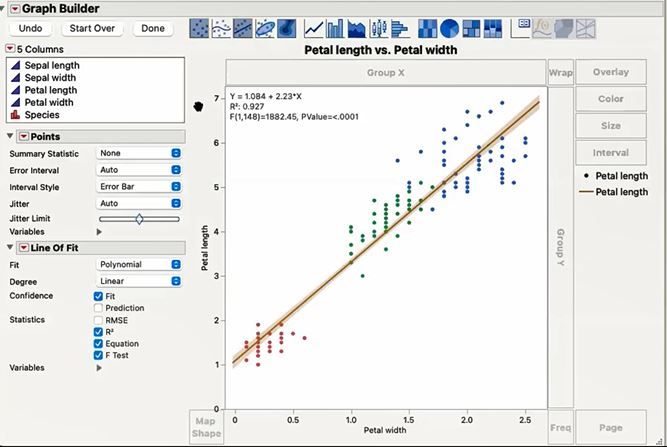

- Perform Exploratory Data Analysis (EDA), as codified and refined by John Tukey, to look at data that may be related and examine statistics that help explain the relationships
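
The three techniques listed above can be sketched in a few lines of standard-library Python. This is only a minimal illustration, not the tool or workflow this page demonstrates: the protein and petal values are made up, the target of 12.0 is an assumption, and the function names are hypothetical. The sketch computes the five values a box plot draws, the one-sample and pooled two-sample Student's t statistics, and a Pearson correlation as one simple EDA measure.

```python
import math
import statistics

# Made-up protein percentages for two grain lots (illustrative only).
lot_a = [12.1, 11.8, 12.4, 12.0, 11.9, 12.3]
lot_b = [11.5, 11.9, 11.7, 11.6, 12.0, 11.4]
target = 12.0  # assumed acceptable protein content

# Made-up petal measurements in cm (illustrative only).
petal_length = [1.4, 1.5, 4.5, 4.7, 5.8, 6.1]
petal_width = [0.2, 0.3, 1.4, 1.5, 2.0, 2.4]


def five_number_summary(data):
    """Return min, Q1, median, Q3, max: the values a box plot displays."""
    q1, median, q3 = statistics.quantiles(data, n=4, method="inclusive")
    return min(data), q1, median, q3, max(data)


def one_sample_t(data, mu):
    """t statistic and degrees of freedom for H0: mean(data) == mu."""
    n = len(data)
    std_err = statistics.stdev(data) / math.sqrt(n)
    return (statistics.mean(data) - mu) / std_err, n - 1


def pooled_two_sample_t(a, b):
    """Student's two-sample t statistic, assuming equal variances."""
    na, nb = len(a), len(b)
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    std_err = math.sqrt(pooled_var * (1 / na + 1 / nb))
    return (statistics.mean(a) - statistics.mean(b)) / std_err, na + nb - 2


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length samples."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


if __name__ == "__main__":
    print("Lot A five-number summary:", five_number_summary(lot_a))
    print("Lot A vs target (t, df):", one_sample_t(lot_a, target))
    print("Lot A vs lot B (t, df):", pooled_two_sample_t(lot_a, lot_b))
    print("Petal length vs width r:", pearson_r(petal_length, petal_width))
```

The sketch stops at the t statistic and its degrees of freedom. Turning those into a p-value requires the t distribution, which the standard library does not provide; SciPy's `scipy.stats.ttest_1samp` and `scipy.stats.ttest_ind` return both the statistic and the p-value.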

Barley Box Plot

Petal Length vs Width

Resources