
Identifying Important Variables

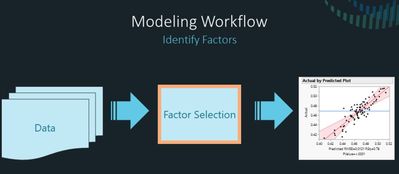

See how to use simple statistical techniques to help identify important variables and interactions in your data as a precursor to building high-performing prediction models and uncovering additional insights.

See how to:

- Understand prerequisites to consider before starting to ID important variables

- Clean-up and prepare data using Column Viewer

- ID and decide how to handle outliers and missing data using Explore Missing Values, Explore Outliers and Explore Patterns

- Ensure dataset is the best representation of the system you plan to study

- Use Distributions in Column headers and Graph Builder to explore the data and visualize trends

- Perform Multivariate Analysis to ID correlated variables

- Use Response Screening to ID the most important variables that affect the response

- Use Predictor Screening (decision tree methods) to test many factors in predicting a response

- Use Multivariate Analysis to ID variables with high correlation

- Cluster variables to group correlated variables together and identify the single variable that is most representative

- Use Fit Model>Response Screening and Stepwise Regression to find interactions and higher-order terms based on your important and unique variables

Questions answered by Scott @scott_allen and @Byron_JMP during the June 2023 live webinar.

Q: How did you "un-select" while in the graph builder?

A: Click on the white space in the graph, not on a marker.

Q: Could you further elaborate on your explanation of the p-value and its rank? What does it mean when it is high vs. low, what should we expect when we see certain values or trends, etc.?

A: For p-value and most statistics, consider . Also, when you are in JMP and want help on a statistic (or anything else displayed), select Tools > ? and click the area (in this case, the p-value column header) that you want help with. Also, see this explanation.

Q: How is the Predictor Screening platform different from a random forest model?

A: In JMP we call Random Forest Bootstrap Forest (because Random Forest is trademarked). It’s the same thing. Predictor Screening does not include a model, only the ranking.

Q: Since Predictor Screening isn’t telling you the percent of variation attributed to each individual predictor, is there any other platform that does just that?

A: The Predictor Screening Report shows the list of predictors with their respective contributions and rank. Predictors with the highest contributions are likely to be important in predicting Y. The Contribution column shows the contribution of each predictor to the Bootstrap Forest model. The Portion column in the report shows the percent contribution of each variable.
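
To make the relationship between the Contribution and Portion columns concrete, here is a minimal sketch. It assumes Portion is simply each predictor's contribution divided by the total contribution. The function name, predictor names, and contribution values are hypothetical, and this is not JMP's implementation.

```python
def portions(contributions):
    """Return (name, share of total contribution) pairs, ranked high to low."""
    total = sum(contributions.values())
    if total == 0:
        raise ValueError("total contribution is zero")
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return [(name, value / total) for name, value in ranked]


# Hypothetical contribution values, for illustration only.
example = {"Temperature": 60.0, "Pressure": 30.0, "Speed": 10.0}
for rank, (name, share) in enumerate(portions(example), start=1):
    print(f"{rank}. {name}: {share:.1%}")
```

Under that assumption the shares always sum to 1, so they describe relative importance among the screened predictors rather than the variation explained in the response.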

Q: Can multivariate analysis have absolute correlation numbers instead of -1 to 1?

A: Yes and no. The table is a matrix of correlation coefficients that summarizes the strength of the linear relationships between each pair of response (Y) variables. See multivariate methods.
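
If you only care about the strength of each relationship and not its direction, one option is to take absolute values of the correlation coefficients yourself. The sketch below does this outside JMP in plain Python. The column names and data are made up, and both function names are hypothetical.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length numeric lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def abs_correlation_matrix(columns):
    """Matrix of |r| for every pair of columns, as a nested dict."""
    names = list(columns)
    return {a: {b: abs(pearson(columns[a], columns[b])) for b in names}
            for a in names}


# Made-up data: X2 falls exactly as X1 rises, so r = -1 and |r| = 1.
data = {
    "X1": [1.0, 2.0, 3.0, 4.0, 5.0],
    "X2": [10.0, 8.0, 6.0, 4.0, 2.0],
    "X3": [2.0, 1.0, 4.0, 3.0, 5.0],
}
matrix = abs_correlation_matrix(data)
for name, row in matrix.items():
    print(name, {other: round(value, 2) for other, value in row.items()})
```

Keep in mind that absolute values hide the sign, so a strong negative relationship looks the same as a strong positive one.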

Q: How would you select the most important variables if you had continuous, binomial (yes/no) and ordered (low, middle, high) independent variables?

A: Yes, you can use all these methods: Predictor Screening and Response Screening.

Q: Do you ever use stepwise regression? Does JMP offer all possible subsets regression?

A: See details on Stepwise Regression.

Q: A lot of my data contains multiple responses, and I find it limits some of the things I can do in JMP. Do you do any special cleaning of multiple response data, or do you work with it as-is? I am referring to a "Multiple Response" column property (a list of multiple data points). I'm assuming it is easier to just break these out into two different columns but wanted to see if there is a better way.

A: Yes, JMP lets you store multiple responses in one cell, but to analyze them, splitting them into separate columns is the right approach.
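
As an illustration of what splitting into columns can look like, the sketch below turns cells that hold several comma-separated responses into one 0/1 indicator column per distinct response. It is plain Python rather than JMP, the comma delimiter is an assumption, and the function name and sample values are hypothetical.

```python
def split_multiple_response(cells, sep=","):
    """Turn multi-response cells into one 0/1 indicator column per response."""
    parsed = [[item.strip() for item in cell.split(sep) if item.strip()]
              for cell in cells]
    categories = sorted({item for row in parsed for item in row})
    return {cat: [1 if cat in row else 0 for row in parsed]
            for cat in categories}


# Made-up cells; the third row is empty (no responses recorded).
cells = ["red, blue", "blue", "", "green, red"]
indicators = split_multiple_response(cells)
for name, column in indicators.items():
    print(name, column)
```

Indicator columns like these can then be used as ordinary predictors or responses, one per category.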

Q: How do Tree-Based methods compare to PLS or PCA?

A: With PCA or PLS, we are trying to represent all the data with a smaller number of latent variables. You are trying to find a reduced number of dimensions that can represent all the data. That is different from tree-based methods, which help you nail down what actually explains something. Tree methods are supplanting PCA and PLS for building better models. Tree-based methods are fantastic for modeling data, but they are really sensitive to changes and can overfit a lot. Tree-based methods that take a random sample of the rows and a random sample of the columns each time they make a new tree are really robust to outliers, and they can model interactions and quadratic or cubic effects, if they exist in the data. So, you don't have to make a bunch of assumptions.

Q: Can I use Predictor Screening and Response Screening when my dependent variables are numerical and my independent variables are continuous, binomial and ordered?

A: Yes.

See how to:

- Use JMP Partition to identify significant factors

- Accurately and automatically model any interaction effects

BONUS: Scott Allen @scott_allen and Byron Wingerd @Byron_JMP answered questions after the 2022 demo, too!

Q: Is there a tutorial video for the outlier tools?

A: This is a good one that covers the Explore Outliers tool in JMP. You can also work through the documentation and examples.

Q: Why wouldn't you get rid of the correlated variables first?

A: The presence of correlated variables often informs the modeling method. PLS, for example, is more robust to correlated X’s. Also, the order may be a preference. You could do it both ways: predictor screening then clustering, or vice versa. Be aware that clustering can be a bit inaccurate when you have lots of missing values or columns that don’t change (like an instrument setting). Sometimes additional data cleanup and preparation is needed if you want to start with clustering.

Q: How would you decide the prediction algorithm?

A: That’s a complicated question and the answer depends on your situation. Most of the time a linear model is going to be the simplest and most informative. Other methods work around specific problems with the data or address specific analysis objectives.

Q: Why are the linear terms so strongly recommended? If my correlation is Y=X^2, why does JMP keep trying to add the X term?

A: This relates to effect heredity. Sometimes your higher-order terms are significant but the main effect is not. Even so, you want to keep the main effect in the model. For example, if you removed the main effect, then in the Prediction Profiler you would not see the slope changing, you would just see the curvature, and you would get a worse prediction.

Q: For Predictor Screening, should the data for the factors and the responses be gathered in the same order (paired), or can randomly gathered (unpaired) data be used?
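
One way to see why the linear term matters: a model with only an intercept and an X² term is forced to be symmetric about X = 0, so it cannot place the turning point of the curve anywhere else. The sketch below is a small plain-Python least-squares fit on made-up data where the true curve is (X - 3)². The helper names are hypothetical, and this is an illustration of the idea, not how JMP fits models.

```python
def least_squares(rows, y):
    """Solve the normal equations (X'X) b = X'y by Gauss-Jordan elimination."""
    k = len(rows[0])
    # Augmented matrix [X'X | X'y].
    a = [[sum(row[i] * row[j] for row in rows) for j in range(k)]
         + [sum(row[i] * t for row, t in zip(rows, y))]
         for i in range(k)]
    for col in range(k):
        # Partial pivoting: bring the largest remaining entry onto the diagonal.
        pivot = max(range(col, k), key=lambda idx: abs(a[idx][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for other in range(k):
            if other != col:
                factor = a[other][col] / a[col][col]
                a[other] = [v - factor * p for v, p in zip(a[other], a[col])]
    return [a[i][k] / a[i][i] for i in range(k)]


def sse(rows, y, coef):
    """Sum of squared errors of the fitted model."""
    return sum((t - sum(c * v for c, v in zip(coef, row))) ** 2
               for row, t in zip(rows, y))


# Made-up data: the true curve is (x - 3)^2 = 9 - 6x + x^2.
x = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
y = [(v - 3.0) ** 2 for v in x]

full = [[1.0, v, v * v] for v in x]   # intercept, x, x^2
reduced = [[1.0, v * v] for v in x]   # intercept, x^2 (main effect dropped)

coef_full = least_squares(full, y)
coef_reduced = least_squares(reduced, y)
sse_full = sse(full, y, coef_full)
sse_reduced = sse(reduced, y, coef_reduced)
print("full model SSE:   ", sse_full)
print("reduced model SSE:", sse_reduced)
```

With the linear term included, the fit recovers the curve essentially exactly. Without it, the error is large because the fitted parabola is stuck with its turning point at X = 0.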

A: Order doesn't really matter, but for Multivariate Analysis, we often like to throw in Ys in one group. The order that you add them to Y is the order it will be shown in the matrix.

Q: Would multi-linear regression analysis of x-factors (600 columns) help in finding which x columns are higher contributors to yield?

A: If you just do linear regression on 600 columns, you're really diluting what's explaining your Y. Tree methods like Partition work great for trying to nail down which of those 600 columns are getting you there. When Scott showed Predictor Screening, it took a random sample of the rows and columns and built a tree, did that 100 times, and averaged across those hundred trees to give you the result. With Partition, you can force things to happen: you can force decisions to happen in different places or follow something as it is getting split. You could then look at the Variance Importance Report, or the split history and R-Square changes. This example is too large to do that, but it will give you an idea of how to use Partition.

Q: If the 600 separate linear regressions dilute the explanatory power of your model, is this why Response Screening employs the FDR (False Discovery Rate) correction in those summary statistics (that Scott showed and sorted ascending before) to try and compensate for this?

A: By chance alone you're going to find things that look really good, and you want to try to filter those out. We don't want to find things by chance alone, so FDR helps correct for that. I emphasize HELPS, because no model is perfect. Likewise, all tree-based models, in situations where you have multicollinearity, work best when you first try to separate out the variance and find the variables that are really the most important instead of throwing everything into the model. Sometimes you can build ensemble models using JMP Pro.
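
To show what an FDR correction does to a set of p-values, here is a minimal sketch of the Benjamini-Hochberg adjustment, one common FDR procedure. It assumes that procedure and may differ in detail from what Response Screening reports. The function name and the p-values are made up.

```python
def fdr_adjust(p_values):
    """Benjamini-Hochberg adjusted p-values, returned in the input order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value to the smallest so that each adjusted
    # value is never larger than the one ranked above it.
    for rank in range(m, 0, -1):
        i = order[rank - 1]
        running_min = min(running_min, p_values[i] * m / rank)
        adjusted[i] = running_min
    return adjusted


# Made-up p-values from four separate tests.
raw = [0.01, 0.04, 0.03, 0.20]
for p, q in zip(raw, fdr_adjust(raw)):
    print(f"raw p = {p:.3f}  FDR-adjusted p = {q:.4f}")
```

In this example two tests that pass a 0.05 cutoff on their raw p-values (0.03 and 0.04) no longer pass it after adjustment, which is the kind of chance finding the correction is meant to filter out.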

Q: Are there other methods for doing this with JMP Pro?

A: Yes, including using the XGBoost Add-In. See below.
