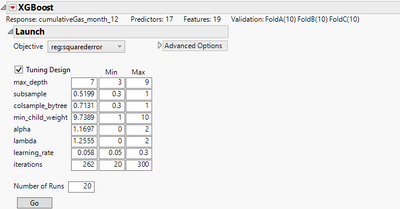

The XGBoost add-in (https://community.jmp.com/t5/JMP-Add-Ins/XGBoost-Add-In-for-JMP-Pro/ta-p/319383) has a very nice option that uses DoE to launch a hyperparameter search and identify the combination of hyperparameters that maximizes a given objective function.

This option is extremely helpful, and it's a standard approach when training models in R or Python.

I think there would be tremendous value in implementing the same option for other predictive modeling platforms, such as Neural, Boosted Tree, Bootstrap Forest and Support Vector Machine. In each case, an experimental matrix could be set up to define the search ranges for the selected hyperparameters.
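
To make the idea of an experimental matrix concrete, here is a rough Python sketch of what I have in mind. It is not how the XGBoost add-in does it internally (I don't know those details); it uses a simple Latin hypercube as a stand-in for a space-filling design. The function name `latin_hypercube`, the hyperparameter names, and the ranges are all made up for illustration.

```python
import random

def latin_hypercube(ranges, n_runs, seed=0):
    """Return n_runs hyperparameter settings spread evenly across each range."""
    rng = random.Random(seed)
    columns = {}
    for name, (low, high) in ranges.items():
        # Split [low, high) into n_runs equal strata and draw one point in each.
        points = [low + (i + rng.random()) * (high - low) / n_runs
                  for i in range(n_runs)]
        # Shuffle so the strata are paired at random across hyperparameters.
        rng.shuffle(points)
        columns[name] = points
    # One dict per run: each row of the experimental matrix.
    return [{name: columns[name][i] for name in ranges} for i in range(n_runs)]

# Hypothetical search ranges; a real platform would expose its own parameters.
ranges = {
    "learning_rate": (0.01, 0.3),
    "max_depth": (2, 10),      # would need rounding to an integer before use
    "subsample": (0.5, 1.0),
}

design = latin_hypercube(ranges, n_runs=8)
for run in design:
    print(run)
```

Each row of `design` would then be one model fit, and the validation metric from each fit becomes the response to model or maximize.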

The XGBoost add-in accomplishes this very nicely, so you guys have already figured out the mechanics. It's a good time to make it available for other models!