Torch Deep Learning Add-In for JMP Pro

- Purpose

- Key Features

- Installation

- System Requirements

- How to Use It

- Examples

- Feedback

- Acknowledgements and Copyright Notices

Purpose

The Torch Deep Learning Add-In for JMP® Pro provides a no-code interface to the popular Torch library for predictive modeling with deep neural networks. The add-in enables you to train and deploy predictive models that use image, text, or tabular features as inputs to predict binary, continuous, or nominal targets.

Key Features

- Access to a wide variety of pretrained models for image and text data which can be fine-tuned or trained from scratch

- Perform image or text classification or regression

- Ability to customize deep networks for tabular data, as well as options to design your own customized convolutional neural nets for both time series and image data

- A large number of advanced options to facilitate model building, assessment, and comparison

- Repeated k-fold cross validation with out-of-fold predictions, plus a separate routine to create optimized k-fold validation columns

- Ability to fit multiple Y responses with different modeling types in one model

- Detailed and interactive graphical and tabular outputs

- Model comparison interface

- Profiling of tabular neural nets, aiding interpretability

- Fast computation on an NVIDIA GPU, if available, on Windows
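To make the repeated k-fold feature above more concrete, here is a minimal Python sketch of how a stratified k-fold validation column can be built. It is an illustration only, not the add-in's own routine (the "optimized" column construction is not described on this page); the function names `make_stratified_kfold_column` and `repeated_kfold_columns` are hypothetical.

```python
import random
from collections import defaultdict


def make_stratified_kfold_column(y, k=5, seed=0):
    """Return one fold id (0..k-1) per row, spreading each class of y evenly.

    Hypothetical helper for illustration; not the add-in's algorithm.
    """
    rng = random.Random(seed)

    # Group row indices by their class label.
    rows_by_class = defaultdict(list)
    for row, label in enumerate(y):
        rows_by_class[label].append(row)

    folds = [None] * len(y)
    offset = 0  # rotate the starting fold so small classes do not pile into fold 0
    for label in sorted(rows_by_class, key=str):
        rows = rows_by_class[label]
        rng.shuffle(rows)
        # Deal the shuffled rows of this class round-robin across the folds.
        for i, row in enumerate(rows):
            folds[row] = (i + offset) % k
        offset += len(rows)
    return folds


def repeated_kfold_columns(y, k=5, repeats=3, seed=0):
    """Build several independent fold columns by changing the shuffle seed."""
    return [make_stratified_kfold_column(y, k, seed + r) for r in range(repeats)]


if __name__ == "__main__":
    y = ["good"] * 10 + ["bad"] * 5
    for column in repeated_kfold_columns(y, k=5, repeats=2):
        print(column)
```

For a given column, the rows with fold id `f` are held out while a model is trained on the remaining rows, so every row receives exactly one out-of-fold prediction per column.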

Installation

You must have an authorized version of JMP Pro 18 or later. Download TorchDeepLearning.jmpaddin from the link in this window on the upper right-hand side. Launch JMP Pro, open TorchDeepLearning.jmpaddin, and click Yes to install. The installed add-in is then located under the Add-Ins top menu (Add-Ins > Torch Deep Learning).

The add-in relies on a large collection of pre-compiled dynamic libraries and pre-trained Torch models which are not included in the add-in after initial installation. Upon the first launch of the main add-in platform (by selecting Add-Ins > Torch Deep Learning > Torch Deep Learning), the add-in downloads and unzips an additional series of large files (approximately 4 GB total). The downloads include CUDA-compiled libraries if you are on Windows, and the total download and extraction of these files may take several minutes or longer depending on the speed of your web connection. Please be patient and let these download to 100% and unzip fully. All downloaded and extracted files are placed underneath the add-in’s home directory (click View > Add-Ins, select TorchDeepLearning, then click the Home Folder link).

System Requirements

This add-in works with JMP® Pro version 18 only. For smaller data sets, the minimum requirements for JMP® Pro should be sufficient; however, for modeling of medium- to large-size datasets, including those with a large number of images or unstructured texts, it is recommended that you have at least 32 GB of CPU RAM and a fast solid-state hard drive with generous storage (e.g. 1 TB) in order to handle the amount of data and parameters that are typically generated for deep neural net modeling.

If you have a Windows machine with an NVIDIA GPU, more details on configuring GPU processing are provided in the Torch add-in documentation (Add-Ins > Torch Deep Learning > Help). On macOS, both Intel x86_64 and Apple Silicon arm64 (M1, M2, and M3) architectures are supported and should be detected automatically.

How to Use It

After installing the add-in in JMP Pro:

- Open a JMP table containing a target variable Y to predict along with image, text, or tabular features to use as inputs.

- Create one or more validation columns by clicking Add-Ins > Torch Deep Learning > Make K-Fold Columns, specifying Y as the Response and Stratification variable (recommended).

- Click Add-Ins > Torch Deep Learning > Torch Deep Learning to launch the platform. Assign variables to roles as in the following example:

The Add-Ins > Torch Deep Learning > Example Data submenu contains several example data sets and a JMP table named Torch_Storybook, which contains 47 examples of different kinds of scenarios in which the add-in can be helpful. It includes rich metadata about each example and links to download JMP data tables with embedded scripts, example output JMP journals, and references.

The Add-Ins > Torch Deep Learning > Download Additional submenu provides a way to download several more image and text models that further expand the capabilities of the add-in.

Click Help from the menu to open detailed documentation on the add-in, including step-by-step examples and guidance for most effective usage.

Examples

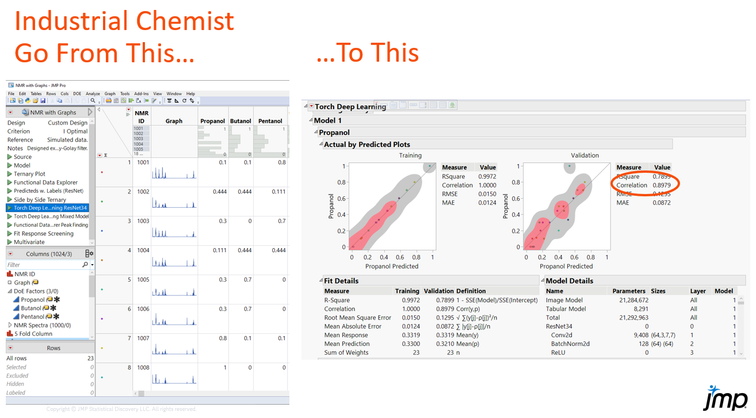

Background: As a chemist you need to predict the composition of unknown samples by comparing against the NMR spectroscopy of a mixture experiment where three components are altered in ratio.

Challenge: The output NMR spectra for mixture experiments are wide, with a small number of experimental runs but thousands of columns of unique features. Standard predictive modeling is inaccurate and overfits, and the process is time-consuming to code.

Process: Take individual spectra and turn them into images with Graph Builder, then use them to train an image classification model (convolutional neural net) to learn what the spectra look like at different ratios of the 3 components.

Outcome: The model can be applied to unknown samples to accurately identify the composition/ratio of the 3 components.

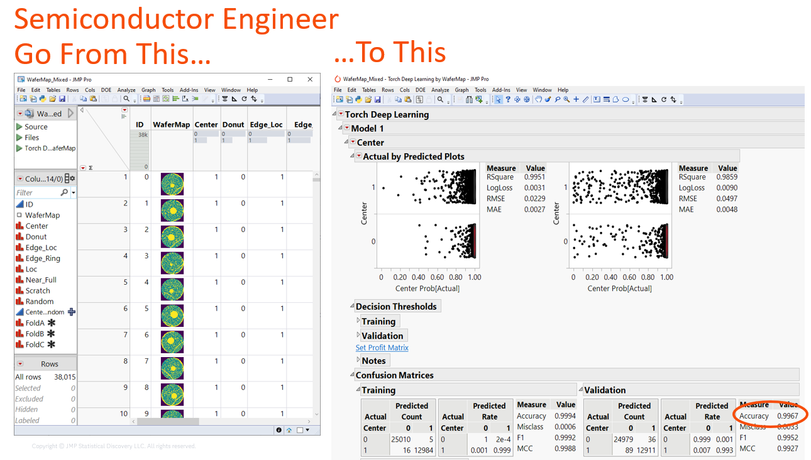

Background: Wafer defects in semiconductor manufacturing can occur across the wafer in different areas. Pictures of the wafers or wafer maps can capture the area of the defects across different batches. Identifying the defect type(s) and the root cause is essential to suggest suitable solutions.

Challenge: You have >30,000 wafer map images, some with numerous defect types that all need to be accurately identified.

Process: Use wafer map images with known wafer defects to train deep convolutional neural nets.

Outcome: Build and deploy a deep learning model to accurately predict the most likely defect classes and probability of defects on new wafer images.

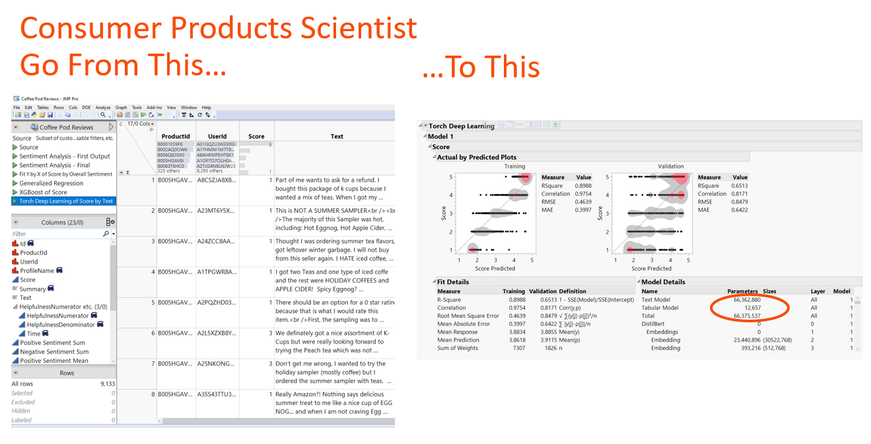

Background: Online product reviews provide a large amount of text data that can be analyzed to understand the customers' sentiment towards the product and to make key decisions about product improvement, customer support, and marketing strategies.

Challenge: Text reviews are unstructured and high volume, which makes it difficult to determine sentiment and identify themes without sophisticated analysis.

Process: Predict buying sentiment using deep language neural nets based on product review scores to identify key outcomes such as likelihood to re-purchase.

Outcome: Identify key sentiment towards product (i.e. likelihood to buy again, product issues) as critical feedback for product decisions.

Video Tutorials

Introduction and Overview

Comprehensive Background and Overview with Examples (requires registration)

Tabular Data and Causal Inference

Image Classification

If you have a particular aspect of the add-in or topic on which you would like to see a video tutorial, please post in the comments.

Feedback

We welcome your feedback and encourage you to post comments here on this page. Note that while add-ins, in general, are not officially supported by our JMP Technical Support team, this add-in was written by and is being actively developed by JMP Life Sciences R&D.

You may also send feedback, problems, questions, and suggestions directly to russ.wolfinger@jmp.com. If reporting a potential bug or crash, please send as much information as possible to reproduce and diagnose it, including data and model setup, screenshots, error windows, log messages, and the operating system and version of JMP Pro you are using.

Acknowledgements and Copyright Notices

We gratefully thank the PyTorch team for developing such great capabilities with a well-designed C++ interface. See the Help document for a complete list of individuals and copyright notices as well as these online notices from the PyTorch Foundation.

We also highly appreciate and thank Mark Lalonde and Francis Charette-Migneault for their excellent contributions to LibTorch:

https://medium.com/crim/contributing-to-libtorch-recent-architectures-and-vanilla-training-pipeline-...

https://github.com/crim-ca/crim-libtorch-extensions

Thanks for this!

Am I reading this right? The documentation for loading models into PyTorch says:

In Python, you must recreate the exact same architecture components using PyTorch and then load these files in turn.

So pretty much no matter what, we still need someone who knows PyTorch and is able to translate what they did in JMP to Python. Correct? Like they need to know how to create the datasets, and the nn, and any validation that the JMP user did?

@vince_faller Thanks for your comment. This part of the doc is referring to deployment in Python in which case these things do indeed need to be done one way or another. Deployment in JSL is easier. The following is being added to the doc:

Example Python deployment code is available in Jupyter iPython notebook files in the add-in home folder. Click View > Add-Ins, select TorchDeepLearning, click on the Home Folder link, then navigate into the notebooks subfolder to view this code.

This example Python deployment code is still rough in several places and we are working to refine and streamline it. We’ll take your post as a vote for more automated Python code generation, as is available with XGBoost model fits. Both the Torch add-in and JMP’s native Python integration are brand new for version 18 and we have not yet implemented connections between the two (the Torch add-in is written in C++ with no Python).

Thanks @russ_wolfinger

Yeah I looked at it with no love. I posted a community question at Tony C's request. Hopefully I can get this as it would definitely be a boon to our workflow.

I get an error when trying to install the Torch Deep Learning add-in. I have JMP Pro 18.0.0. The error that shows up in the log is:

Retrying: schannel: next InitializeSecurityContext failed: Unknown error (0x80092013) - The revocation function was unable to check revocation because the revocation server was offline.

Has anyone seen this and is there a solution? Thanks!

Hi @danf This looks like a conflict with security software running on your machine. The add-in calls external dynamic library files for Torch and other related routines saved within the add-in folder. To find this folder, from the JMP main menu click View > Add-Ins, select TorchDeepLearning, then click Home Folder. The dynamic library files are in the subfolder .../lib/18/install/x64-release/bin/ on Windows and ../mac-release/ on Mac. Suggest checking with your IT expert to see how to clear this folder for executables within your security framework.

Additionally, I tried web searching your error message and saw some hits related to git in case that helps.

Thanks Experts!

What is the final result of this deep learning prediction formula?

The key question is: how do you use the training results to make predictions?

Could the experts please give further examples of this?

Hi @russ_wolfinger, I had feedback from one of the Torch Storybook users that they are getting an Error that they cannot load ImClass Library for the Potato and Diabetes examples. Do you have any suggestions on the best way to troubleshoot this?

Thanks,

Thanks for reporting @Peter_Hersh , The "cannot load the ImClass Library" error in the JMP log is nearly always a sign that the install did not work correctly. The easiest remedy is to reinstall the add-in then click Add-Ins > Torch Deep Learning > Torch Deep Learning at a time when the internet connection is fast. A progress window should appear showing 4-7 zip files and they should all reach 100% download and unzip successfully. Then the add-in should be good to go. If this still does not work, please have them contact me at russ.wolfinger@jmp.com and we can troubleshoot further--we have a few alternate ways to do the install if needed.

Thanks Russ. That reinstall worked.

I heard that one of the Torch add-in packages comes with a script to reduce image size, but I don't see it in the package I downloaded for Mac. Is there a JSL script floating around out there that may do this? I ask because I hit a memory wall with about 750 rows, each containing a photo that was originally ~3 MB (the entire dataset size is 6 GB), even on my 64-GB MacBook Pro, so I'm trying to see if reducing the image size somewhat could allow me to analyze more training data (albeit at the expense of resolution, which might lead to a loss of accuracy).

Thanks @abmayfield . The current add-in includes a submenu "Image Utilities" with three options: Check Image Size, Resize Images, and Crop Images. Suggest running the first two and possibly the third if it makes sense, although the current cropping functionality is limited to be the same for every image.

Suggest using size 224 x 224 for resizing initially as this tends to provide a reasonable tradeoff between resolution, performance, and speed for most all of the image models. Note the resizing happens in place in the JMP image column, so after resizing it's a good idea to save the table as a different name in order not to lose the original large images.

Resizing can dramatically improve speed so hopefully this works well for you. You have some incredible pictures and looking forward to seeing where this leads!

Thanks so much! I'm going to try this right now.

Dumb question on the resizing, which seems to work, but I worry that I may have down-sampled TOO much by using 224. Do the values correspond to pixels? In other words, would using "1,500" lead to MORE information being maintained or less? Most of my images are around 4,000 x 4,000 pixels.

@abmayfield 224 is the number of pixels on both x and y, and higher values mean more pixels, higher resolution, but slower training speeds. While 224 x 224 is a common size that works well across a wide range of problems, each application is different. Your coral reef pictures have some spectacular detail and it may in fact improve model performance to use higher resolutions (more pixels).

Recommend trying several different Image Models with Image Size set to 224 at first to assess out-of-fold performance. Then, with your best model from this, try resizing the original images to 448 x 448 to see how much of a difference it makes, and work your way up from there as desired.

There is a natural tradeoff here with computational performance. With larger pictures run times can take hours or even overnight on a Mac. Additional strategies are to use cropped images or move to a Windows machine with a decent NVIDIA GPU.
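The tradeoff between image size and memory discussed above can be checked with simple arithmetic. The sketch below assumes uncompressed RGB images (3 channels) and either 1 byte per value (8-bit pixels) or 4 bytes per value (32-bit floats); how the add-in actually holds images in memory is not stated here, so treat the numbers as rough estimates. The helper names `raw_image_bytes` and `dataset_gib` are hypothetical.

```python
def raw_image_bytes(width, height, channels=3, bytes_per_value=1):
    """Uncompressed size of one image in bytes (assumes a dense pixel array)."""
    return width * height * channels * bytes_per_value


def dataset_gib(n_images, width, height, channels=3, bytes_per_value=1):
    """Uncompressed size of n_images equally sized images, in GiB."""
    total = n_images * raw_image_bytes(width, height, channels, bytes_per_value)
    return total / 2**30


if __name__ == "__main__":
    n_images = 750  # row count mentioned in the question above
    for side in (224, 448, 1500, 4000):
        as_uint8 = dataset_gib(n_images, side, side, bytes_per_value=1)
        as_float32 = dataset_gib(n_images, side, side, bytes_per_value=4)
        print(f"{side:>5} x {side:<5} 8-bit: {as_uint8:8.2f} GiB   "
              f"float32: {as_float32:8.2f} GiB")
```

Because the pixel count grows with the square of the side length, doubling the size from 224 to 448 quadruples the memory per image, and a 4,000 x 4,000 image holds roughly 319 times as many pixels as a 224 x 224 one.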

please share zip file via google drive or terabox

Hello @Raaed , please contact me at russ.wolfinger@jmp.com. What is happening when downloading the zip files when you first launch the add-in? The most recent version breaks them into smaller files and may work better if you have not tried it. Also, are you on Windows with NVIDIA GPU, Windows CPU, or Mac?

Hi @russ_wolfinger , the download does not complete; it continues to 90% and then restarts from the beginning.

thanks for your help

Is there a way to save the model "formulas" in such a way that I could insert a new image as a new row in the JMP data table and have the predicted columns populate? Or does this need to be done with scripting or Python coding?

Hi @DrewLuebe ,

The Torch models do not use JMP formulas due to their large size and complexity. Instead, they are serialized and saved as binary PyTorch files on disk with suffix ".pt".

The first thing to do is save the model with a unique name using the model red triangle > Save Model. This assigns the name you specify to a folder on disk that contains all of the saved model files (2-3 files per validation fold).

For new images in a JMP table (either appended to training data or in a new table), use the "Restore From" Advanced Option, set Epochs = 0, Go, then Save Predicteds to score the new rows. If it is a new JMP table, it must also contain the same Y and validation columns with the same levels as the original training data. This approach can be coded with JSL scripting if desired.

Alternatively, deploy the models using Python after clicking model red triangle > Generate Python Code. See the Help doc pages 27-28 for more details, and let us know if you have further questions, either here or in the new JMP Marketplace.
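Before restoring or deploying a saved model, it can help to confirm that the saved model folder actually contains the expected ".pt" files. The following is a small sketch using only the Python standard library; it assumes the ".pt" files sit directly inside the folder you named in Save Model, which is an assumption about the layout, and the function name `list_model_files` is hypothetical.

```python
from pathlib import Path


def list_model_files(model_dir):
    """Return (file name, size in bytes) for each .pt file directly in model_dir.

    Assumes the serialized model files are stored at the top level of the
    folder; adjust the glob pattern if your folder is organized differently.
    """
    folder = Path(model_dir)
    if not folder.is_dir():
        raise FileNotFoundError(f"Model folder not found: {folder}")
    return [(p.name, p.stat().st_size) for p in sorted(folder.glob("*.pt"))]


if __name__ == "__main__":
    import sys

    # Usage: python list_model_files.py /path/to/saved/model/folder
    for name, size in list_model_files(sys.argv[1]):
        print(f"{name}\t{size / 2**20:.1f} MiB")
```

With 2-3 files per validation fold, a 5-fold model would be expected to list roughly 10-15 files.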

Thank you!

- © 2025 JMP Statistical Discovery LLC. All Rights Reserved.
