In the paper titled "Confidence curves: an alternative to null hypothesis significance testing for the comparison of clas...", Daniel Berrar describes problems with using null hypothesis significance testing to compare classifiers and proposes confidence curves as an alternative. After installing the add-in, you can generate confidence curves that compare several classifiers against a reference classifier as follows:

- In JMP Pro's menu bar, select "Analyze => Predictive Modeling => Model Screening".

- Assign the appropriate columns to the "Y, Response" and "X, Factor" fields.

- In the "Folded Crossvalidation" section of the dialog, enable the "K Fold Crossvalidation" option. Then enter the number of folds in the "K" input box and the number of repeats in the "Repeated K Fold" input box.

- Select "OK" to run the Model Screening platform.

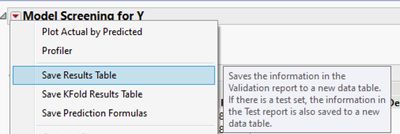

- When the results dialog is displayed, click the red triangle menu.

- Select "Save Results Table".

- In JMP Pro's menu bar, select "Add-Ins => Confidence Curves".

- Follow the displayed instructions to choose the results table saved earlier with "Save Results Table", the reference model, and the metric to be used for comparison.

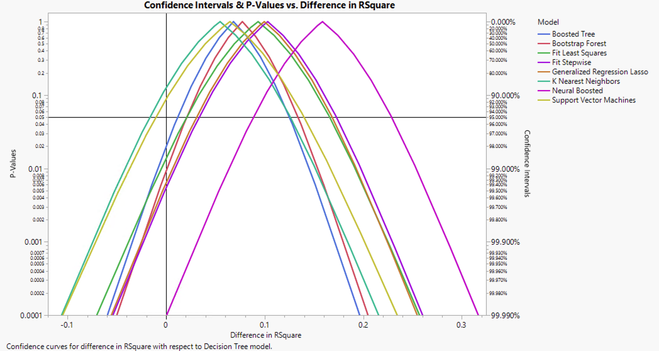

- Afterwards, a Graph Builder report similar to the one shown in the figure below is generated.
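
For readers who want to see what a confidence curve encodes, here is a minimal Python sketch. It is not the add-in's implementation. It assumes the curve plots, for each hypothesised difference between a candidate model and the reference model, the two-sided p-value computed from the per-fold metric differences. The function name `confidence_curve`, the `test_train_ratio` parameter, and the sample data are hypothetical. The standard error uses a variance correction for overlapping training sets (the `1/n + n_test/n_train` factor), and a normal approximation stands in for Student's t distribution so that the example needs only the standard library.

```python
from math import sqrt
from statistics import NormalDist, mean, variance


def confidence_curve(diffs, null_values, test_train_ratio=0.0):
    """Return a two-sided p-value for each hypothesised difference.

    diffs: per-fold metric differences (candidate minus reference).
    null_values: hypothesised true differences to evaluate.
    test_train_ratio: n_test / n_train, e.g. 1 / (k - 1) for k-fold
        cross-validation; 0.0 gives the plain standard error of the mean.
    Assumes the differences are not all identical (non-zero variance).
    """
    n = len(diffs)
    d_bar = mean(diffs)
    # Variance-corrected standard error of the mean difference.
    se = sqrt((1.0 / n + test_train_ratio) * variance(diffs))
    std_normal = NormalDist()  # normal approximation (assumption)
    return [
        2.0 * (1.0 - std_normal.cdf(abs(d_bar - d0) / se))
        for d0 in null_values
    ]


# Hypothetical accuracy differences from 2 repeats of 5-fold cross-validation.
diffs = [0.02, 0.01, 0.03, 0.00, 0.02, 0.04, 0.01, 0.02, 0.03, 0.02]
grid = [i / 1000 for i in range(-20, 61)]
curve = confidence_curve(diffs, grid, test_train_ratio=1 / 4)

# Print every tenth point: hypothesised difference, then p-value.
for d0, p in zip(grid[::10], curve[::10]):
    print(f"{d0:+.3f}  {p:.4f}")
```

Plotting `curve` against `grid` gives a tent-shaped curve that peaks at the observed mean difference. Under these assumptions, a horizontal cut at height alpha marks the endpoints of the (1 - alpha) confidence interval for the difference.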