JMP User Community, Discussions: solve problems, and share tips and tricks with other JMP users.

Statistical Performance of XGBoost in Model screening Test set

Hello,

I am using Model Screening with nested cross-validation to compare several ML models for classifying a disease. XGBoost performs best across the Test folds on ROC AUC and misclassification rate. But when I look at other statistics in more detail by running the Decision Threshold analysis, I get all the performance measures (F1 score, MCC, sensitivity, specificity, etc.) on the Test set for every other model, but not for XGBoost. Why is that? I want to report these statistics as well. Any tips on how I can get them for the test folds of the XGBoost model?

regards,

Lu

Accepted Solutions

Re: Statistical Performance of XGBoost in Model screening Test set

Thanks for the excellent response @Victor_G

@Lu, I wanted to mention that for Model Screening we fixed a leakage problem in Version 19, which is available now via Early Adopter and due to ship in production in a few weeks. I suggest rerunning with 19 once you get it. I believe XGBoost Test results should then be present and, as @Victor_G astutely indicates, always use the Test set split for comparing models.

Note XGBoost and Torch are different from other JMP platforms in that they only use two-way splits (Training and Validation), but they do not peek at the validation split during training and so validation results are leak-free. Their setup is designed for full K-Fold Cross Validation and saving out-of-fold predictions instead of doing a single three-way split.
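For readers unfamiliar with the term, here is a minimal Python sketch of what "out-of-fold predictions" means. It is not how the JMP add-in is implemented: the fold-assignment helper, the toy centroid classifier standing in for XGBoost, and the synthetic data are all assumptions made for illustration.

```python
import random

def make_kfold_column(n_rows, k, seed=0):
    """Assign each row a fold id in 0..k-1, balanced and shuffled (hypothetical helper)."""
    folds = [i % k for i in range(n_rows)]
    random.Random(seed).shuffle(folds)
    return folds

def fit_centroids(xs, ys):
    """Toy stand-in model (NOT XGBoost): the mean of x for each class."""
    means = {}
    for label in (0, 1):
        vals = [x for x, y in zip(xs, ys) if y == label]
        means[label] = sum(vals) / len(vals)
    return means

def predict_proba(model, x):
    """Score in [0, 1]; closer to the class-1 centroid gives a higher score."""
    d0 = abs(x - model[0])
    d1 = abs(x - model[1])
    if d0 + d1 == 0:
        return 0.5
    return d0 / (d0 + d1)

def out_of_fold_predictions(xs, ys, folds, k):
    """Score every row with the one model that never trained on that row's fold."""
    oof = [None] * len(xs)
    for fold in range(k):
        train_idx = [i for i, f in enumerate(folds) if f != fold]
        model = fit_centroids([xs[i] for i in train_idx],
                              [ys[i] for i in train_idx])
        for i, f in enumerate(folds):
            if f == fold:
                oof[i] = predict_proba(model, xs[i])
    return oof

# Synthetic data, for illustration only.
rng = random.Random(1)
ys = [i % 2 for i in range(100)]
xs = [rng.gauss(2.0 * y, 1.0) for y in ys]
folds = make_kfold_column(len(xs), k=5)
oof = out_of_fold_predictions(xs, ys, folds, k=5)
```

Because each row's score comes from a model that did not see that row, the full `oof` column can be evaluated as a whole without a separate three-way split.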

A couple additional suggestions:

1. Try the Autotune option in XGBoost, using the standalone setup as described by @Victor_G . (There is also a utility Make K-Fold Columns that comes with the add-in.) You can likely achieve even better out-of-fold performance.

2. Torch Deep Learning neural nets are also definitely worth a try for comparison, and for absolute top performance, ensemble them with XGBoost.
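One simple way to read "ensemble them" is to average the out-of-fold probabilities of the two models and score the average. The sketch below assumes that interpretation; it is plain Python, not the add-in's method, and the two score lists are made-up toy numbers chosen so the effect is visible.

```python
def roc_auc(y_true, scores):
    """AUC as the share of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

def average_ensemble(*prob_lists):
    """Row-wise mean of the predicted probabilities from several models."""
    return [sum(ps) / len(ps) for ps in zip(*prob_lists)]

# Toy out-of-fold scores (hypothetical values, not real model output).
y = [0, 0, 1, 1, 0, 1]
oof_model_a = [0.2, 0.6, 0.7, 0.4, 0.1, 0.9]
oof_model_b = [0.3, 0.2, 0.4, 0.8, 0.5, 0.7]
oof_ensemble = average_ensemble(oof_model_a, oof_model_b)

auc_a = roc_auc(y, oof_model_a)
auc_b = roc_auc(y, oof_model_b)
auc_ensemble = roc_auc(y, oof_ensemble)
```

In this toy case each model misranks one pair and the average ranks every pair correctly. On real data an ensemble is not guaranteed to beat its members, so it should be compared on the same out-of-fold rows.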

Re: Statistical Performance of XGBoost in Model screening Test set

Hi @Lu,

Just for clarification, decision threshold adjustments should be done on the validation set, not on the test set.

Here are some reminders about the terms and how each set is used:

- Training set: Used for the actual training of the model(s),

- Validation set: Used for model optimization (for example, hyperparameter fine-tuning or feature/threshold selection) and model selection,

- Test set: Used to assess the generalization and predictive performance of the selected model on new/unseen data.

The purpose of the validation set is to help compare and select a model, as well as to fine-tune hyperparameters or decision thresholds.

The test set should not be used in any way during model training and fine-tuning, because it serves as the final unbiased "validation" of your model and the final assessment of its performance.
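As a rough illustration of keeping the test set apart, the following Python sketch holds out a stratified test subset before any training or tuning happens. The function name, the 20% fraction, and the labels are assumptions for the example; in JMP you would build this with a validation column instead.

```python
import random
from collections import defaultdict

def stratified_holdout(labels, test_fraction, seed=0):
    """Return (dev_idx, test_idx), keeping class proportions similar in both parts."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)

    test_idx = []
    for idxs in by_class.values():
        rng.shuffle(idxs)
        n_test = round(len(idxs) * test_fraction)
        test_idx.extend(idxs[:n_test])

    test_set = set(test_idx)
    # Everything not held out is available for training and validation.
    dev_idx = [i for i in range(len(labels)) if i not in test_set]
    return dev_idx, sorted(test_idx)

# Hypothetical labels: 30 diseased (1) and 70 healthy (0) subjects.
labels = [1] * 30 + [0] * 70
dev_idx, test_idx = stratified_holdout(labels, test_fraction=0.2)
```

Only `dev_idx` rows would then be split further into training and validation (or K folds); `test_idx` rows stay untouched until the model and its threshold are fixed.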

You can read more about the distinction between validation and test sets in the following conversations:

- If we are seeing "Training" and "Validation" results in the output even when we ...

- CROSS VALIDATION - VALIDATION COLUMN METHOD

...

On your specific question: if I'm not mistaken, XGBoost is only available in JMP Pro through an add-in created by @russ_wolfinger. Since the functionality comes from an add-in, not all model tuning options (such as Decision Threshold) may be available. You can create a wish on the JMP Wish List or comment about this on the page dedicated to the XGBoost add-in.

However, if XGBoost is the top-performing model, you could:

- Separate your test set from your training/validation data first! This way, the model never sees the test data at any step, and you only use the test data once your model has fixed hyperparameters and a fixed decision threshold.

- Create a K-fold validation column on your training/validation dataset.

- Launch the XGBoost platform (from the add-in menu) and specify your K-fold validation column:

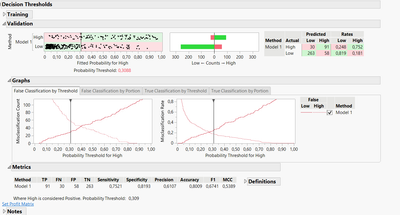

- Run the XGBoost training, and adjust the decision threshold on the validation set.

- Save the Prediction Formula and assess performance on the test set.
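The last two steps can be sketched outside JMP as follows: choose the threshold on validation predictions, then report sensitivity, specificity, F1 and MCC once on the test set. This is generic Python under stated assumptions, not the add-in's procedure. The probabilities are made-up values, and maximizing MCC over a 0.05 grid is just one possible selection criterion.

```python
import math

def confusion(y_true, probs, threshold):
    """Count TP, FP, TN, FN when predicting class 1 for prob >= threshold."""
    tp = fp = tn = fn = 0
    for y, p in zip(y_true, probs):
        pred = 1 if p >= threshold else 0
        if pred == 1 and y == 1:
            tp += 1
        elif pred == 1 and y == 0:
            fp += 1
        elif pred == 0 and y == 0:
            tn += 1
        else:
            fn += 1
    return tp, fp, tn, fn

def metrics(tp, fp, tn, fn):
    """Threshold-dependent statistics; undefined ratios are reported as 0.0."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "sensitivity": tp / (tp + fn) if tp + fn else 0.0,
        "specificity": tn / (tn + fp) if tn + fp else 0.0,
        "f1": 2 * tp / (2 * tp + fp + fn) if 2 * tp + fp + fn else 0.0,
        "mcc": (tp * tn - fp * fn) / denom if denom else 0.0,
    }

def best_threshold(y_val, p_val, candidates):
    """Pick the candidate with the highest validation MCC (ties go to the first)."""
    return max(candidates,
               key=lambda t: metrics(*confusion(y_val, p_val, t))["mcc"])

# Hypothetical validation and test predictions from a saved prediction formula.
y_val = [0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1]
p_val = [0.05, 0.10, 0.15, 0.20, 0.32, 0.60, 0.28, 0.37, 0.55, 0.70, 0.90]
y_test = [0, 1, 0, 1, 1, 0, 0, 1]
p_test = [0.12, 0.40, 0.30, 0.22, 0.85, 0.08, 0.18, 0.66]

candidates = [i / 20 for i in range(1, 20)]          # 0.05, 0.10, ..., 0.95
threshold = best_threshold(y_val, p_val, candidates)  # tuned on validation only
test_metrics = metrics(*confusion(y_test, p_test, threshold))  # reported once
```

The test rows are touched only in the final line, after the threshold is fixed, which matches the order of the steps above.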

Hope this answer will help you,

"It is not unusual for a well-designed experiment to analyze itself" (Box, Hunter and Hunter)

Re: Statistical Performance of XGBoost in Model screening Test set

Thanks for the excellent responses, Victor and Russ. I am already looking forward to seeing what's new in the output of the Model Screening platform in version 19 in the near future!

Regards,

Ludo
