Solve problems, and share tips and tricks with other JMP users.- JMP User Community

How to modify hyperparameters in Model Screening platform

Hi JMP experts,

I find the Model Screening platform very useful. I've worked out the hyperparameters through the Tuning Design Table, so I'm wondering whether there's a way to modify hyperparameters in the Model Screening platform, for example through JSL.

If the platform can't modify the hyperparameters, can I use the default parameters in the article?

Looking forward to your reply.

Accepted Solutions

Re: How to modify hyperparameters in Model Screening platform

Hi @Wang123,

Welcome to the Community!

Have you read the documentation about Model Screening? To answer your question directly, here is what the JMP Help says: "The modeling platforms use default options and tuning parameters in model fitting. You can try to improve the fit past what the default yields by calling platforms directly and choosing different options."

So by default, you can't tune hyperparameters in the Model Screening platform (except perhaps through JSL), and that's not what this platform is for. Model Screening is AutoML: the aim is to quickly fit a large number of very different types of Machine Learning models, to better understand which type(s) of model seem to fit the data correctly. Once this exploration phase is done, you can use the corresponding model platforms to tune the hyperparameters with tuning design tables and possibly improve your model's performance further.

Note that Machine Learning algorithms differ in how sensitive they are to hyperparameter settings: for example, Random Forests are among the most robust ML algorithms, and their hyperparameters have little influence on performance. See [1802.09596] Tunability: Importance of Hyperparameters of Machine Learning Algorithms (arxiv.org)

You may technically be able to do what you intend, but keep several warnings in mind:

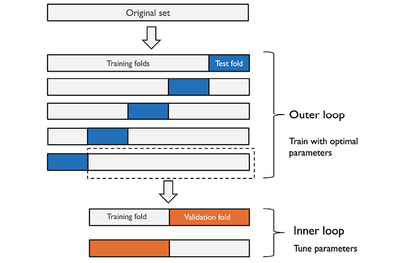

- You may have to use nested cross-validation (if you are already using cross-validation), or cross-validation on your training set (with the validation set used to compare the models' performances), if you want to fit several models and tune them. The extra data splitting ensures that you're not overfitting through hyperparameter optimization, and that part of your data can still be used to compare and select algorithms in a fair and "as unbiased as possible" way.

- A possible problem with the Model Screening platform is that the cross-validation option is random by default. Depending on your dataset and the distributions of your features/inputs, this may not be a sensible choice and may not give a fair, representative assessment of your dataset. A workaround could be to create a stratified (K-folds) validation column formula and use it in the validation panel of Model Screening: Launch the Make Validation Column Platform (jmp.com)

- If you really want to tune several models with this platform or any AutoML platform, think about the number of models to fit and the time and computation needed: you'll need to fit each selected type of model K x K' times (nested cross-validation with K = number of outer folds for model validation, K' = number of inner folds for hyperparameter tuning). Depending on your dataset, its dimensionality and its complexity, this "brute-force" approach may not be reasonable or achievable.

More resources about nested cross-validation:

https://inria.github.io/scikit-learn-mooc/python_scripts/cross_validation_nested.html

https://scikit-learn.org/stable/auto_examples/model_selection/plot_nested_cross_validation_iris.html
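
To make the K x K' cost above concrete, here is a minimal sketch in plain Python (not JSL, and not what Model Screening does internally). The function names `kfold_indices`, `nested_cv_splits` and `count_fits`, and the parameters `n_settings` and `n_model_types`, are hypothetical names chosen for illustration. The sketch only generates row indices; fitting the models is left out.

```python
import random


def kfold_indices(n_rows, k, seed):
    """Shuffle row indices and deal them into k folds of near-equal size."""
    rows = list(range(n_rows))
    random.Random(seed).shuffle(rows)
    return [rows[i::k] for i in range(k)]


def nested_cv_splits(n_rows, k_outer, k_inner, seed=0):
    """Yield (inner_train, inner_valid, outer_test) row-index triples.

    The outer fold is never seen during tuning; the inner folds are
    carved out of the remaining rows only.
    """
    outer_folds = kfold_indices(n_rows, k_outer, seed)
    for o, outer_test in enumerate(outer_folds):
        outer_train = [r for j, fold in enumerate(outer_folds) if j != o for r in fold]
        inner_folds = kfold_indices(len(outer_train), k_inner, seed + 1 + o)
        for inner_positions in inner_folds:
            inner_valid = [outer_train[p] for p in inner_positions]
            held = set(inner_valid)
            inner_train = [r for r in outer_train if r not in held]
            yield inner_train, inner_valid, outer_test


def count_fits(k_outer, k_inner, n_settings=1, n_model_types=1):
    """Inner fits needed when every setting is tried in every inner fold."""
    return k_outer * k_inner * n_settings * n_model_types


splits = list(nested_cv_splits(n_rows=30, k_outer=5, k_inner=3))
print(len(splits))  # one inner fit per split, for one model and one setting
print(count_fits(5, 3, n_settings=20, n_model_types=4))
```

With 5 outer folds, 3 inner folds, 20 candidate hyperparameter settings and 4 model types, the count already exceeds a thousand fits, which is why the brute-force route can become impractical.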

My advice would be to proceed sequentially and use the platform for what it does best: comparing very diverse algorithms and selecting the most promising ones. Then you can optimize the hyperparameters of the (few) models that seem to perform best, in order to improve performance a little. Be careful to avoid data leakage in this process: for example, never use your test set until a final model has been chosen and optimized (otherwise you can expect optimistic results on your test set and bad surprises when the algorithm is used on new data).

This process makes more sense to me than a brute-force approach, as hyperparameter optimization won't "magically" make an algorithm learn much better than a more suitable algorithm/method.

I hope this answer (even if it is not exactly what you expected) will help you,

"It is not unusual for a well-designed experiment to analyze itself" (Box, Hunter and Hunter)

Re: How to modify hyperparameters in Model Screening platform

Hi @Wang123,

Glad to hear that my response was helpful.

To use Machine Learning successfully, you need to set up a strategy and framework for your Machine Learning project before starting any analysis. Machine Learning projects often fail because the starting point (data collection considerations) or the end phase (testing conditions and monitoring) is not well considered and defined:

- Starting point: Think about how you collected your data. How representative is your dataset of the task you want to do? In which context was the data collected: observational studies, or controlled experiments like DoE? Are the data sampling and the feature/variable distributions similar to what the model will encounter in its environment (for example variable ranges/levels, balance or imbalance between classes or values, response distributions, similar measurement equipment performance, uncertainty in the measurements, ...)?

These questions are just some examples of what you need to consider and define when using data for Machine Learning, and they can help manage your expectations.

- End phase: You need to know how you will test your final model. Can you already split your dataset and keep a small part for testing purposes, or are you planning to acquire new data to test and monitor your model's performance?

Here are some other recommendations and guidance related to your questions, as well as additional comments:

- To avoid any misunderstanding, it's important to know what each set is used for:

- Training set: Used for the actual training of the model(s),

- Validation set: Used for model optimization (for example hyperparameter fine-tuning, feature/threshold selection, ...) and model selection,

- Test set: Used to assess the generalization and predictive performance of the selected model on new/unseen data. At this stage you should not have several models in competition.

- For a small dataset, there are several options for model validation (model comparison and selection):

- K-folds cross-validation: Split the dataset into K folds. The model is trained K times; each fold is used K-1 times for training and once for validation. This lets you assess model robustness, as performance should be similar across all folds.

- Leave-One-Out cross-validation: Extreme case of K-fold cross-validation, where K = N (number of observations). It is used when you have a small dataset and want to assess whether your model is robust. This technique may be sensitive to highly influential points/outliers.

- Autovalidation/Self-Validating Ensemble Model (SVEM): Instead of separating observations into different sets, you give each observation a weight for training and a weight for validation (a bigger weight in training induces a lower weight in validation, meaning that the observation is used mainly for training and less for validation), and then repeat the procedure while varying the weights. It is used for very small datasets, and/or datasets where you can't independently split observations between sets. For example, in Design of Experiments the set of runs can be based on a model; if so, you can't independently split runs between training and validation, because it would bias the model in a negative way: the runs needed to estimate parameters would no longer be available, which dramatically reduces the performance of the model.

All these approaches are supported by JMP: Launch the Make Validation Column Platform (jmp.com)
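
The three schemes above can be sketched in a few lines of plain Python to show how rows are reused. This is only an illustration, not how JMP implements them. All function names are hypothetical, and the weight formula in `autovalidation_weights` (-ln(u) for training and -ln(1 - u) for validation, with u uniform on (0, 1)) is an assumption about one common way to obtain anti-correlated weights.

```python
import math
import random


def kfold_splits(n_rows, k, seed=0):
    """Return k (train_rows, valid_rows) pairs over shuffled row indices."""
    rows = list(range(n_rows))
    random.Random(seed).shuffle(rows)
    folds = [rows[i::k] for i in range(k)]
    splits = []
    for i, valid in enumerate(folds):
        train = [r for j, fold in enumerate(folds) if j != i for r in fold]
        splits.append((train, valid))
    return splits


def leave_one_out_splits(n_rows):
    """Leave-one-out is K-fold with K equal to the number of rows."""
    return kfold_splits(n_rows, k=n_rows)


def autovalidation_weights(n_rows, seed=0):
    """Return anti-correlated (train_weight, valid_weight) pairs, one per row.

    ASSUMPTION: weights are -ln(u) and -ln(1 - u) for u uniform on (0, 1).
    """
    rng = random.Random(seed)
    pairs = []
    for _ in range(n_rows):
        u = rng.uniform(1e-9, 1 - 1e-9)  # keep both logarithms finite
        pairs.append((-math.log(u), -math.log(1 - u)))
    return pairs


splits = kfold_splits(n_rows=12, k=4)
valid_counts = [sum(r in valid for _, valid in splits) for r in range(12)]
train_counts = [sum(r in train for train, _ in splits) for r in range(12)]
print(valid_counts)  # every row is validated exactly once
print(train_counts)  # every row is trained on K-1 = 3 times
```

Calling `autovalidation_weights` repeatedly with different seeds gives the "repeat while varying the weights" step: no row is ever dropped, it just counts more or less toward training on each repetition.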

You can use (stratified) K-Folds cross-validation in individual model platforms by creating your validation column formula first (choose "Make K-Folds Validation column" in the launch window) and then using this validation column as the "Validation" parameter in any model's launch window.

- Depending on your dataset size and the conditions in which the data was collected, you may choose one of these options. In any case, for a small dataset, stratification on the input variables is highly recommended, to avoid dissimilar folds with very different model performances. Also check for group variables (for example, if you have replicates, make sure all replicates are in the same fold/set to avoid data leakage). For DoE data or very small datasets, you may not be able to separate data into different folds, as this may create very different sets for training and validation, so SVEM can be interesting. You may also find complementary discussions and answers in this post: CROSS VALIDATION - VALIDATION COLUMN METHOD

- If you're dealing with small datasets, choose simple algorithms. Generally, the higher the number of parameters in the model, the bigger your training dataset needs to be, as you'll need more data to estimate each parameter precisely. With small datasets I would rather choose robust algorithms, such as Random Forests, Support Vector Machines, and the Generalized Regression platform. Do take advantage of the "safeguard" options in these algorithms, such as Regularization/Penalization for Generalized Regression (Estimation Method Options (jmp.com)) or "Early Stopping", available both in GenReg and in tree-based methods (Boosted Tree and Bootstrap Forest), to avoid overfitting as much as possible.

Some LinkedIn articles I created about it for more explanations:

Both of these algorithms have either few hyperparameters to tune, robust performance with respect to hyperparameter tuning (see: https://arxiv.org/abs/1802.09596 mentioned above), or training specifics that help them avoid overfitting and reduce prediction variance (the bootstrap sampling in Random Forest, for example).

I would not use Boosted Tree or other boosted algorithms for small datasets, as their focus is on reducing bias (systematic error) as much as possible by sequentially correcting previous errors. This makes them very effective prediction algorithms, but they are highly sensitive to the training set, with possibly poor generalization performance and high variance.

As a remark on cross-validation and the way the results are displayed, there are several warnings:

- The results will be displayed for each fold. Don't keep a model trained and validated on one specific fold: that would be considered "cheating", because you would be selecting the most favorable data split to improve model performance, which biases the overall and generalization performance you expect on new data or the test set.

- K-folds cross-validation has a misleading name, as it's not strictly a validation method but more a "robustness test" to see how a model behaves with different data partitionings. See: MFML 065 - Understanding k-fold cross-validation (youtube.com)

So the important information in this step is not the individual performance of the model on each fold, but how regular and similar the performances are (average and standard deviation of performance across all folds). If you see discrepancies/high variance in the results, that may indicate that the model is not suitable for your task and/or that your data has singular individual points (to be checked) that confuse the model and prevent it from generalizing/finding patterns.

- Finally, once cross-validation is done, there is no consensus on what to do with the results. Some retrain the selected model on the whole dataset, some ensemble the individual models trained on each fold, and some stack the predictions ... Here is a link about this deployment phase: How to get from evaluation to final model (substack.com)

Choose the option that makes more sense to you.
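
The "average and standard deviation across folds" reading described above can be sketched as follows. This is plain Python with made-up R-square values. The helper names and the 10% spread threshold `max_cv` are hypothetical, chosen only to illustrate the idea, and are not a JMP rule.

```python
import statistics


def summarize_folds(fold_scores):
    """Return mean, standard deviation and relative spread of per-fold scores."""
    mean = statistics.mean(fold_scores)
    sd = statistics.stdev(fold_scores)  # sample standard deviation
    return {"mean": mean, "sd": sd, "cv": sd / abs(mean) if mean else float("inf")}


def flag_unstable(fold_scores, max_cv=0.10):
    """True when fold-to-fold spread exceeds max_cv (hypothetical threshold)."""
    return summarize_folds(fold_scores)["cv"] > max_cv


stable = [0.81, 0.79, 0.83, 0.80, 0.82]    # illustrative, made-up values
unstable = [0.85, 0.42, 0.88, 0.31, 0.79]  # illustrative, made-up values
print(summarize_folds(stable))
print(flag_unstable(stable), flag_unstable(unstable))
```

The second series has some good folds, but the spread is what matters: picking the 0.88 fold as "the" model would be exactly the cherry-picking warned against above.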

More generally, there are two ways to deal with ML algorithms:

- Either your dataset size, constraints and other selection criteria reduce the number of candidate models, and you just try a few models to select the most promising one. Here is a recording of a presentation I gave this year, with a Space-Filling mixture DoE and the use of Machine Learning: Synergy Between Design of Experiments and Machine Learning for Enhanced Domain E... - JMP User Commu... If you watch the "model choice" part, you may get some ideas about selection criteria for your own project.

- Or you have no idea which model may perform better, and you would like a first exploratory modelling test/competition between algorithms. In this case, you can use a subset of your data (60-80%) to train and compare the models or, if your dataset is very small, use it entirely with cross-validation for this first exploratory part through the Model Screening platform. Once you have identified promising algorithms, use the individual model platforms with a clear validation strategy (for example stratified K-folds cross-validation with a fixed seed for reproducibility), compare them, select the most promising one and test it on your test set/new data.
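
As a minimal sketch of "stratified K-folds with a fixed seed", combined with the earlier advice to keep replicates in the same fold, here is one way to assign fold numbers in plain Python. The function name, the default seed and the example labels are hypothetical. The sketch assumes that all rows of a group (for example, replicates of one run) share the same stratum label.

```python
import random
from collections import defaultdict


def stratified_group_folds(strata, groups, k, seed=2024):
    """Assign a fold number (0..k-1) to every row.

    strata[i] is the stratification label of row i, groups[i] its group id.
    Rows sharing a group id always land in the same fold. ASSUMPTION: all
    rows in a group share one stratum label (true for replicated runs).
    """
    group_stratum = {}
    for s, g in zip(strata, groups):
        group_stratum.setdefault(g, s)

    by_stratum = defaultdict(list)
    for g, s in group_stratum.items():
        by_stratum[s].append(g)

    rng = random.Random(seed)  # fixed seed => the same folds on every run
    group_fold = {}
    next_fold = 0
    for s in sorted(by_stratum):
        members = sorted(by_stratum[s])
        rng.shuffle(members)
        for g in members:  # deal groups round-robin so strata spread evenly
            group_fold[g] = next_fold % k
            next_fold += 1
    return [group_fold[g] for g in groups]


# Made-up example: 6 runs with 2 replicates each, 2 strata.
strata = ["low"] * 6 + ["high"] * 6
groups = ["r1", "r1", "r2", "r2", "r3", "r3", "r4", "r4", "r5", "r5", "r6", "r6"]
folds = stratified_group_folds(strata, groups, k=3)
print(folds)
```

The resulting list plays the role of a validation column: each fold receives rows from both strata, and no replicate pair is split across folds.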

Sorry for the length, I hope there will be something for you in this big answer! :):):)

"It is not unusual for a well-designed experiment to analyze itself" (Box, Hunter and Hunter)

Re: How to modify hyperparameters in Model Screening platform

Hi Victor_G,

Thank you for your reply, which is very inspiring to me. I can try this approach: first the Model Screening platform, then the appropriate algorithm.

K-fold cross-validation is a good way to avoid overfitting with small data. However, some algorithm platforms (unlike Model Screening) do not support K-fold cross-validation, such as Bootstrap Forest, Boosted Tree, and Naive Bayes, so a stratified (K-folds) validation column formula can't be used there. So, after selecting the most promising algorithm in the Model Screening platform and optimizing its hyperparameters, can you share some experience on how to train a model better when it comes to small data?

Lastly, after clicking the "Run Selected" button, I found that every algorithm in the Model Screening platform with K-fold cross-validation automatically generates a Validation Fold. Can I use this Validation Fold in the same algorithm with the best hyperparameters to avoid overfitting?

Looking forward to hearing from you.

Re: How to modify hyperparameters in Model Screening platform

Hi Victor_G,

Your reply is very helpful. I know how to make a K-Folds validation column. As far as I know, some algorithm platforms do not support K-fold cross-validation, such as Bootstrap Forest, Boosted Tree, and Naive Bayes. This is documented in the JMP Help at https://www.jmp.com/support/help/en/18.0/index.shtml#page/jmp/kfold-and-holdback-validation.shtml. In this case, I have to split our small dataset into training, validation and test sets. Is there a way to use K-folds cross-validation in these algorithm platforms (Bootstrap Forest, Boosted Tree, and Naive Bayes)?

You said "The results will be displayed for each folds. Don't run a model trained and validated on specific fold, that would be considered cheating...", and also "Finally, once cross-validation done, there is no consensus on what to do with the results. Some retrain the selected model on the whole dataset, some are ensembling the individual models trained on each folds". I don't know which is more appropriate: training on a specific fold or on the whole training set?

Looking forward to hearing from you.

Re: How to modify hyperparameters in Model Screening platform

Hi @Wang123,

Sorry, you're right about the restricted use of a K-folds cross-validation column in some modeling platforms.

One possible workaround is to create an ordinary validation column formula (stratified sampling with 80% training and 20% validation), use it in the model platform, and use the "Simulate" function to generate many cross-validation samples and results to assess robustness. I have described the methodology in another post: Solved: Re: How can I automate and summarize many repeat validations into one output tab... - JMP Us...
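
The idea behind this workaround (redraw a stratified 80/20 split many times and look at the spread of the validation results) can be sketched in plain Python. This is not the JMP "Simulate" function itself. The function names, the `fit_and_score` callback and the toy majority-class "model" are hypothetical stand-ins for a real platform fit, and the labels are made-up data.

```python
import random
from collections import defaultdict


def stratified_holdout(labels, train_fraction=0.8, rng=None):
    """Return (train_rows, valid_rows), sampling train_fraction within each label."""
    rng = rng or random.Random()
    by_label = defaultdict(list)
    for row, label in enumerate(labels):
        by_label[label].append(row)
    train, valid = [], []
    for label in sorted(by_label):
        rows = by_label[label][:]
        rng.shuffle(rows)
        cut = round(train_fraction * len(rows))
        train.extend(rows[:cut])
        valid.extend(rows[cut:])
    return sorted(train), sorted(valid)


def repeated_holdout(labels, fit_and_score, n_repeats=100, seed=1):
    """Redraw the split n_repeats times and collect one validation score per draw."""
    rng = random.Random(seed)
    scores = []
    for _ in range(n_repeats):
        train, valid = stratified_holdout(labels, 0.8, rng)
        scores.append(fit_and_score(train, valid))
    return scores


labels = ["pass"] * 30 + ["fail"] * 10  # made-up example data


def majority_accuracy(train, valid):
    """Toy stand-in for a real model: predict the most common training label."""
    train_labels = [labels[r] for r in train]
    guess = max(set(train_labels), key=train_labels.count)
    return sum(labels[r] == guess for r in valid) / len(valid)


scores = repeated_holdout(labels, majority_accuracy, n_repeats=50)
print(min(scores), max(scores))
```

With a real model the scores would vary from draw to draw, and their mean and standard deviation give the robustness picture that a single 80/20 split cannot.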

Concerning your second question, my personal opinion would be to stack the predictions from the individual models, so that each individual model contributes equally to the final prediction. This seems to be the most neutral approach; it would reduce bias and (mostly) variance (the "wisdom of the crowd" approach) and help prevent overfitting. It could also be a good opportunity to estimate prediction uncertainty intervals from the individual models' contributions.
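
As a minimal sketch of the equal-contribution idea above, the predictions of the K fold-models can be combined by a plain equal-weight average, with the spread between models as a rough indication of uncertainty. This is one simple reading of "each model has the same contribution", written in plain Python with made-up numbers. The function name is hypothetical, and the spread is not a calibrated prediction interval.

```python
import statistics


def average_fold_predictions(per_model_predictions):
    """Combine predictions from K fold-models with equal weight.

    per_model_predictions[m][i] is model m's prediction for new row i.
    Returns one (mean, standard deviation) pair per row; the spread is only
    a rough indication of model disagreement, not a calibrated interval.
    """
    combined = []
    for row_predictions in zip(*per_model_predictions):
        combined.append((statistics.mean(row_predictions),
                         statistics.stdev(row_predictions)))
    return combined


# Made-up predictions from 4 fold-models for 3 new rows.
fold_predictions = [
    [10.2, 5.1, 7.9],
    [9.8, 5.3, 8.4],
    [10.1, 4.9, 6.8],
    [9.9, 5.1, 8.9],
]
for mean, spread in average_fold_predictions(fold_predictions):
    print(round(mean, 2), round(spread, 2))
```

In this example the third row shows the largest disagreement between the fold-models, which is the kind of point worth checking before trusting the averaged prediction.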

Ensembling would be too complex for small dataset, as the combination of the individual models would need an extra validation to make sure your final ensemble model is not overfitting and able to generalize.

Depending on your close knowledge about ML algorithms, the option to use the whole dataset and train the final model on it could also be used, and comparing the outcomes of the cross-validated model with the "non-validated" model to spot any sign of overfitting.

Again, using simple models and regularization/restriction techniques greatly helps to prevent overfitting.

Hope this answer will help you,

"It is not unusual for a well-designed experiment to analyze itself" (Box, Hunter and Hunter)

Re: How to modify hyperparameters in Model Screening platform

Hi Victor_G,

Thanks for your help. If the Model Screening platform had a hyperparameter tuning function, or if all the individual modeling platforms had a K-fold function, my problem would be easy to solve. It is a pity that both lack some functions.

After some thought, I'd also like to ask if the following steps are appropriate:

(1) I used stratified sampling to split the data into an 80% training set and a 20% test set.

(2) On the training set (80%), I trained multiple machine learning algorithms with K-fold cross-validation in the Model Screening platform. Of course, this step uses the default hyperparameters. After comparing them, I found that some algorithms performed best, such as Bootstrap Forest.

(3) Then, I determined the optimal hyperparameters of Bootstrap Forest with the Tuning Design Table.

(4) On the training set (80%), I refitted the Bootstrap Forest algorithm with the optimal hyperparameters. Of course, I can also fit other algorithms.

(5) On the test set (20%), I further assessed the performance of Bootstrap Forest and the other algorithms.

Are the above steps appropriate in JMP?

In step 2, can I just report the results of the model comparison (results of K-fold cross-validation) with default hyperparameters in the article? Of course, we know that many articles do step 3 first and then step 2, so they report the results of model comparisons with optimal hyperparameters.

In step 4, I didn't set up any cross-validation method. Is this correct, or is there another way?

Looking forward to hearing from you.

Re: How to modify hyperparameters in Model Screening platform

Hi @Wang123,

I find steps 1) and 2) appropriate.

Steps 3) and 4) could be combined: find the optimal hyperparameters of several models, evaluate and compare them, and select a final model. You also need a validation strategy for this step, as optimizing hyperparameters without any validation would lead to overfitting. Depending on the results of this hyperparameter optimization on the (K-fold?) validation set(s), you select the algorithm that seems to be the most appropriate.

Depending on your validation strategy, you either keep the algorithm trained as it is (if you have used a validation set to compare and select a model), or you can stack the models if you have used K-fold cross-validation (to get the average prediction from the individual models).

In step 5, you assess the performance of your final model on new and unseen data. There should be only one algorithm/model at this stage. Comparing and selecting models is the job of the validation phase, not of the test phase.
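The revised workflow above can be sketched end to end as follows. This is a plain Python illustration on made-up data, not a JMP script: a k-nearest-neighbours regressor stands in for Bootstrap Forest, the neighbour count `k` stands in for the tuned hyperparameters, and all function names are hypothetical.

```python
import math
import random
import statistics

def knn_predict(train, x, k):
    """Average y of the k training points nearest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return statistics.fmean(y for _, y in nearest)

def rmse(predict, points):
    """Root mean squared error of a prediction function on (x, y) points."""
    return statistics.fmean((predict(x) - y) ** 2 for x, y in points) ** 0.5

def fold_splits(train, n_folds=5):
    """Return (fit_rows, held_out_rows) pairs, one per fold."""
    folds = [train[i::n_folds] for i in range(n_folds)]
    return [([p for j, f in enumerate(folds) if j != i for p in f], folds[i])
            for i in range(n_folds)]

def cv_rmse(train, k):
    """Mean validation RMSE over the folds for one hyperparameter value."""
    return statistics.fmean(
        rmse(lambda x: knn_predict(fit, x, k), held_out)
        for fit, held_out in fold_splits(train))

# Made-up data: y = sin(x) plus noise.
rng = random.Random(7)
data = []
for _ in range(150):
    x = rng.uniform(0, 6)
    data.append((x, math.sin(x) + rng.gauss(0, 0.3)))
rng.shuffle(data)
train, test = data[:120], data[120:]   # step 1: 80/20 split, test set put aside

# Steps 2 to 4: tune and select using K-fold validation on the training set only.
candidates = [1, 3, 5, 9, 15]
cv_scores = {k: cv_rmse(train, k) for k in candidates}
best_k = min(cv_scores, key=cv_scores.get)

# Final model: equal-weight average of the models fitted on each fold.
fits = [fit for fit, _ in fold_splits(train)]
def final_model(x):
    return statistics.fmean(knn_predict(fit, x, best_k) for fit in fits)

# Step 5: a single final model, evaluated once on the untouched test set.
test_rmse = rmse(final_model, test)
print("validation RMSE per k:", cv_scores)
print("selected k:", best_k, "test RMSE:", test_rmse)
```

The point of the structure is that the test set never influences which hyperparameters or which model get selected.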

Concerning your questions:

"In step 2, can I just report the results of model comparisons (results of K-fold cross-validation) with default hyperparameters in the article?" : It depends what you want to show/demonstrate. If you want to explain why you have chosen some specific models, you could use the results of step 2. It makes more sense to compare results from step 2 and 3 on the selected algorithms if you want to highlight the importance and benefit of hyperparameters tuning (which is not always important for some robust models like Random Forests).

"In step 4, I didn't set up any cross-validation method, is this correct? Or is there another way?" I wouldn't recommend refitting on the entire training set, unless you use robust algorithms and have metrics to ensure you're not overfitting. Random Forests are typically good examples of robust algorithms and have internal validation (for continuous response) through Out-Of-Bag samples : https://www.jmp.com/support/help/en/17.2/#page/jmp/overview-of-the-bootstrap-forest-platform.shtml#

Comparing the metrics of the In-Bag and Out-Of-Bag samples can help you assess whether your Random Forest may be overfitting.

If you're using a different algorithm and/or are not sure about its robustness, just keep your optimized (and validated!) model from the previous step (or the stacked models if you were using K-fold cross-validation).
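As a rough illustration of the In-Bag versus Out-Of-Bag comparison, here is a plain Python sketch. It does not reproduce JMP's Bootstrap Forest or its reports: bagged k-nearest-neighbour regressors on made-up data stand in for the trees, and the function names are hypothetical. Each bootstrap model is scored on the rows it was drawn with (in-bag) and on the rows it never saw (out-of-bag).

```python
import math
import random
import statistics

def knn_predict(sample, x, k):
    """Average y of the k sample points nearest to x."""
    nearest = sorted(sample, key=lambda p: abs(p[0] - x))[:k]
    return statistics.fmean(y for _, y in nearest)

def rmse(sample, points, k):
    """RMSE on `points` of a k-NN model that uses `sample` as training rows."""
    return statistics.fmean(
        (knn_predict(sample, x, k) - y) ** 2 for x, y in points) ** 0.5

def bag_errors(data, k, n_models=25, seed=3):
    """Mean in-bag and out-of-bag RMSE over bootstrap resamples of the data."""
    rng = random.Random(seed)
    in_bag, out_of_bag = [], []
    for _ in range(n_models):
        picks = [rng.randrange(len(data)) for _ in data]  # draw with replacement
        chosen = set(picks)
        sample = [data[i] for i in picks]
        oob = [p for i, p in enumerate(data) if i not in chosen]
        in_bag.append(rmse(sample, sample, k))
        out_of_bag.append(rmse(sample, oob, k))
    return statistics.fmean(in_bag), statistics.fmean(out_of_bag)

# Made-up data: y = sin(x) plus noise.
rng = random.Random(11)
data = []
for _ in range(100):
    x = rng.uniform(0, 6)
    data.append((x, math.sin(x) + rng.gauss(0, 0.3)))

in1, oob1 = bag_errors(data, k=1)      # very flexible model
in15, oob15 = bag_errors(data, k=15)   # smoother model
print("k=1 : in-bag", in1, "out-of-bag", oob1)
print("k=15: in-bag", in15, "out-of-bag", oob15)
```

A large gap between the in-bag and out-of-bag error, as for the very flexible model here, is the kind of signal that suggests overfitting.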

Hope this last answer will help you,

PS: Don't forget to "Accept as Solution" the answer(s) that were helpful to you. It's a good way for other JMP users to quickly get to the solution without reading everything.

"It is not unusual for a well-designed experiment to analyze itself" (Box, Hunter and Hunter)

Re: How to modify hyperparameters in Model Screening platform

Hi Victor_G,

Thanks for your valuable perspective! It is very helpful to me.
