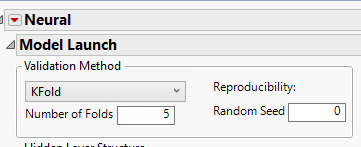

Right now I can do K-fold cross-validation when building a neural network:

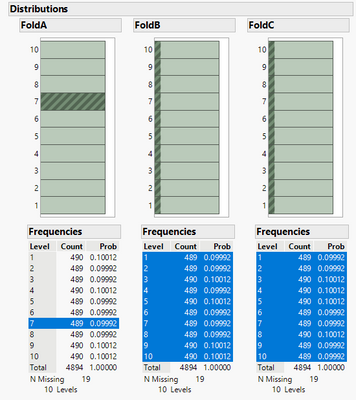

And with the recent XGBoost add-in (https://community.jmp.com/t5/JMP-Add-Ins/XGBoost-Add-In-for-JMP-Pro/ta-p/319383), it's even better, because multiple fold columns can be added, each orthogonal to the others.

However, this option is not available in other platforms, such as Boosted Tree, Bootstrap Forest, or even a simpler Multiple Linear Regression model.

In those platforms you can only add a single validation column, which defines a single hold-out sample.

Nothing about k-fold cross-validation is specific to XGBoost, so this option should be available in all platforms. That would give more generalizable error metrics, with models from different platforms evaluated consistently on the same folds.
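To illustrate why k-fold is platform-agnostic, here is a minimal Python sketch (not JMP or JSL, and not how any JMP platform implements it). A single fold column is generated once and then reused by two different model types, so their cross-validated errors are directly comparable. The function names (`make_fold_column`, `kfold_rmse`, `fit_linear`, `fit_mean`) are hypothetical, and the two toy models simply stand in for "different platforms".

```python
import math
import random


def make_fold_column(n_rows, k, seed=0):
    """Hypothetical helper: assign each row a fold ID in 0..k-1, equal-sized folds."""
    folds = [i % k for i in range(n_rows)]
    random.Random(seed).shuffle(folds)  # random order, fold sizes unchanged
    return folds


def fit_linear(xs, ys):
    """Ordinary least squares for y = a + b*x (single predictor)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx if sxx else 0.0
    a = my - b * mx
    return lambda x: a + b * x


def fit_mean(xs, ys):
    """Baseline model: always predict the training mean."""
    m = sum(ys) / len(ys)
    return lambda x: m


def kfold_rmse(fit, xs, ys, folds):
    """For each fold: train on the other folds, return RMSE on the held-out fold."""
    scores = []
    for k in sorted(set(folds)):
        train = [i for i, f in enumerate(folds) if f != k]
        test = [i for i, f in enumerate(folds) if f == k]
        model = fit([xs[i] for i in train], [ys[i] for i in train])
        sse = sum((ys[i] - model(xs[i])) ** 2 for i in test)
        scores.append(math.sqrt(sse / len(test)))
    return scores


# Toy data: y = 2x + 1 plus Gaussian noise.
rng = random.Random(42)
xs = [float(i) for i in range(50)]
ys = [2 * x + 1 + rng.gauss(0, 1) for x in xs]

# One fold column, shared by every model so their errors are comparable.
folds = make_fold_column(len(xs), k=5, seed=1)
for name, fit in [("linear", fit_linear), ("mean", fit_mean)]:
    scores = kfold_rmse(fit, xs, ys, folds)
    print(f"{name}: mean RMSE over {len(scores)} folds = {sum(scores) / len(scores):.3f}")
```

Calling `make_fold_column` again with a different `seed` produces another independent fold column; averaging results over several such columns is one common way to get a more stable error estimate than a single hold-out split.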