- JMP User Community

Nominal Logistic Regression -- Logistic fit and Prediction Profiler appear opposite

Good evening and thank you in advance for your help!

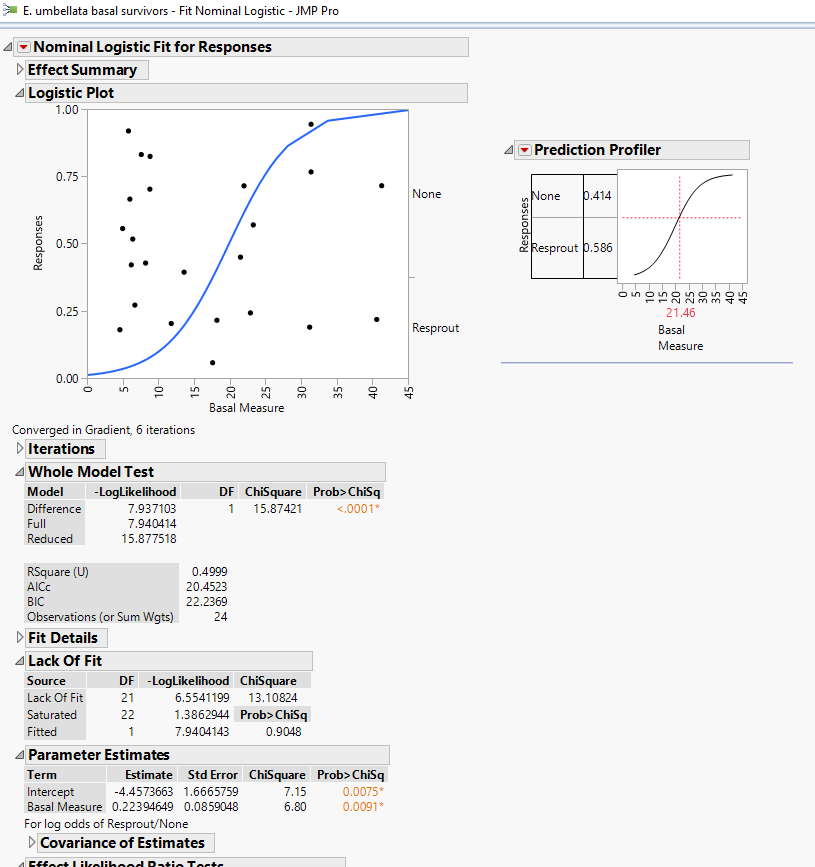

For my master's project, I am attempting to run a nominal logistic regression to show that as the basal diameter of an invasive plant species increases, its likelihood of resprouting after herbicide treatment also increases. The data certainly seem to show this, and the prediction profiler (pictured) seems to support it, but the fitted graph seems to show the opposite (pictured).

A few questions:

1) Why are my data points all over the place? Shouldn't they be represented as either 1 or 0?

2) Why is response on a continuous scale on the y axis? Is this the predicted percentage? My response variable is shown on the right.

3) Why do the two appear to be opposite? Is there anything I can do?

I tried changing value ordering, but received the same results (opposite curve).

Thank you!

Accepted Solutions

Re: Nominal Logistic Regression -- Logistic fit and Prediction Profiler appear opposite

Please follow Kevin's excellent advice and read the JMP documentation for the Logistic platform. In the meantime, I will answer your specific questions.

- The mosaic plot at the top of the platform uses the continuous predictor value for the abscissa. For the ordinate, it generates a uniform random value such that the point falls on the correct side of the logistic curve (below or above). This 'trick' is helpful because it aids your visual assessment of the data versus the fit. It gives you an honest depiction of the distribution and mass of data points that determined the fitted curve.

- The response is the probability of the outcome Responses = Resprout, not the outcome itself. (Many users naively replace categorical levels with numerical codes such as 0 and 1 and use ordinary least squares regression but this approach is unprincipled, problematic, and usually unsatisfying.) The probability is transformed in logistic regression to suit the behavior of probability and the assumptions of regression.

- The probability is bounded over the range [0,1]. The response in linear regression is not bounded at all.

- The probability curve is not linear. It exhibits asymptotes at the extremes of the predictor variable. Linear (in the parameters) regression assumes a linear (in the parameters) relationship. You can add transforms of the predictor (for example, X^2, X^3) to the linear combination but it still won't approximate the probability curve well enough to be practically useful.

- The transformation of the probability occurs in two stages.

- First, the probability of Responses = Resprout is converted to the odds of Responses = Resprout. That is, odds = P( Responses = Resprout ) / P( not Responses = Resprout ), or equivalently odds = P( Responses = Resprout ) / ( 1 - P( Responses = Resprout ) ). The odds are bounded below by 0 and unbounded above, that is, they lie in [0, +infinity). This change is a big improvement for linear regression, but not quite enough.

- Second, the odds are converted with the (natural) logarithm function to log( odds ). The range of log( odds ) is (-infinity, +infinity). This last change is the one that satisfies the assumption of an unbounded linear response for the regression analysis.

- The log( odds ) is also known as the logit or logit( P( Resprout ) ).

- The logistic regression model is now log( odds ) = a + bX, a linear function of X, just like any other linear regression. (Note that you could include other predictor variables, interaction effects, and powers as well, if necessary.)

- The fitted log( odds ) is transformed back to probability after the fitting, using P = 1 / ( 1 + exp( -( a + bX ) ) ), for the sake of plots and interpretation.

- I am not exactly sure what you mean by 'opposite' but let me explain the results shown in your example.

- Examine the right side of the mosaic plot. Notice that the two levels of Responses appear with a tick mark separating them. This scale indicates the marginal probability of the outcomes. The distance from the origin (0) to the tick mark indicates the proportion of the cases with the first level, Responses = Resprout. The distance from the tick mark to the top (1) indicates the proportion of the cases with the second level, Responses = None. If you drew a straight horizontal line from the tick mark on the right side to the probability scale on the left side, it would represent the null hypothesis of regression: the probability of Responses = Resprout does not depend on the predictor Basal Measure.

- Examine the logistic curve. It indicates the conditional probability that Responses = Resprout. It represents the alternative hypothesis of your regression: the probability of Responses = Resprout depends on the predictor Basal Measure. The probability that Responses = Resprout is near zero when Basal Measure is near 5 but this probability rises toward one when Basal Measure is near 40.
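The two ideas above, the two-stage transformation and the random vertical placement of the points, can be sketched in a few lines of Python. This is only an illustration, not how JMP implements the platform. The coefficients `A` and `B` are hypothetical values picked so that the curve is near zero at Basal Measure = 5 and near one at 40; they are not the fitted estimates from the thread. The function names are also made up for this sketch, and placing Resprout points below the curve and None points above it is an assumption based on the description of the plot.

```python
import math
import random


def odds_from_probability(p):
    """Stage 1: probability in (0, 1) -> odds in (0, +infinity)."""
    return p / (1.0 - p)


def logit(p):
    """Stage 2: natural log of the odds, unbounded in both directions."""
    return math.log(odds_from_probability(p))


def probability_from_logit(z):
    """Back-transformation used for plots: log(odds) -> probability."""
    return 1.0 / (1.0 + math.exp(-z))


def fitted_probability(x, a, b):
    """P(Responses = Resprout) under the model log(odds) = a + b*x."""
    return probability_from_logit(a + b * x)


def plot_ordinate(x, resprouted, a, b, rng):
    """Uniform random y-value on the correct side of the fitted curve.

    Assumption: Resprout points go below the curve, None points above it.
    """
    p = fitted_probability(x, a, b)
    return rng.uniform(0.0, p) if resprouted else rng.uniform(p, 1.0)


# Hypothetical coefficients for illustration only (not fitted values).
A, B = -5.0, 0.25

rng = random.Random(0)  # seeded so the jitter is reproducible
for x in (5, 20, 40):
    p = fitted_probability(x, A, B)
    print(f"x={x:>2}  P(Resprout)={p:.3f}  "
          f"odds={odds_from_probability(p):.3f}  logit={logit(p):+.2f}")

# Two observations at the same x land on opposite sides of the curve.
print(plot_ordinate(20, True, A, B, rng), plot_ordinate(20, False, A, B, rng))
```

The y-values of the plotted points are therefore not data. Only the side of the curve on which a point falls carries information about its outcome.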

In case you are interested, we cover all forms of logistic regression and other methods suitable for categorical responses in our JMP Software: Analyzing Discrete Response course.

Re: Nominal Logistic Regression -- Logistic fit and Prediction Profiler appear opposite

Hi, Casteel!

Good evening to you, too.

Please reference Chapter 11 "Logistic Regression Models" of the Fitting Linear Models book under the Help menu. JMP books in the Help menu are very helpful, and should assist with your confusion regarding responses and interpretation of graphs.

The fitted probabilities of a nominal regression model always sum to 1. Both graphs suggest that when the Basal Measure is low, the Resprout probability is lower; when the Basal Measure is high, the Resprout probability is higher.

Isn't that what you're trying to prove? The graphs (both of 'em) are consistent with that hypothesis.
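As a rough illustration of the sum-to-1 point, here is a small Python sketch for a two-level response. The coefficients `A` and `B` and the function names are hypothetical, chosen only so the numbers resemble the behavior described in this thread; they are not taken from the poster's fit. Because the two fitted probabilities are complements, a curve drawn for Responses = None falls exactly where the curve for Responses = Resprout rises, so the same model can look "opposite" depending on which level a display happens to show.

```python
import math


def p_resprout(x, a, b):
    """Fitted P(Responses = Resprout) for log(odds) = a + b*x."""
    return 1.0 / (1.0 + math.exp(-(a + b * x)))


def p_none(x, a, b):
    """Fitted P(Responses = None): the complement for a two-level response."""
    return 1.0 - p_resprout(x, a, b)


# Hypothetical coefficients for illustration only (not fitted values).
A, B = -5.0, 0.25

for x in (5, 10, 20, 30, 40):
    pr, pn = p_resprout(x, A, B), p_none(x, A, B)
    print(f"Basal Measure={x:>2}  P(Resprout)={pr:.3f}  "
          f"P(None)={pn:.3f}  sum={pr + pn:.3f}")
```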

- © 2024 JMP Statistical Discovery LLC. All Rights Reserved.
